Matrix Computations

David Gleich

Purdue University

Fall 2024

Course number CS-51500

Tuesday-Thursday 10:30-11:45pm.

Readings and topics

Main reference

- MM&P

- Matrix Methods and Programs. David F. Gleich

- DW

- Numerical Linear Algebra with Julia. Eric Darve and Mary Wootters.

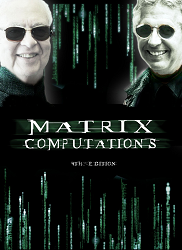

- GvL

- Matrix computations 4th edition. Johns Hopkins University Press. Gene H. Golub and Charles F. Van Loan

Other references

- Demmel

- James Demmel, Applied Numerical Linear Algebra, 1997

- Trefethen

- Lloyd N. Trefethen and David Bau [Numerical Linear Algebra], 1997

- Saad

- Saad, Iterative Methods for Sparse Linear Systems, 2nd edition, SIAM 2003

Lecture 30

We finished up on bipartite matrices, then did a brief tour of randomized algorithms (see the Julia code), then did a review for the final (see slides!)

- Notes

- Lecture 30

- Reading

- Final review

- Julia

- Randomized Matrix Methods (html) (jl) (ipynb)

- Videos

- Lecture 30a - Bipartite Matrices

- Lecture 30b - Randomized Algorithms

- Lecture 30c - List of topics

Lecture 29

Algorithms for the SVD and Bipartite Matrices (really, matrices with Property A that are consistently ordered).

- Notes

- Lecture 29

- Reading

- Singular Value Algorithms (not complete) (MM&P - 30)

- Julia

- Bipartite Matrices (html) (jl) (ipynb)

- Videos

- Lecture 29a - Two Matrices for Computing SVDs

- Lecture 29b - SVD Algorithms

Lecture 28

The equivalence between QR and subspace iteration, tridiagonal reduction, and a brief introduction to sparse eigenvalue algorithms.

- Notes

- Lecture 28

- Reading

- Trefethen and Bau - Lecture 28

- MM&P - 29

- Julia

- Tridiagonal Reduction (html) (jl) (ipynb)

- Sparse Eigenvalues (html) (jl) (ipynb)

- Videos

- Lecture 28a - Subspace and QR

- Lecture 28b - Improving QR for Eigenvalues

- Lecture 28c - Tridiagonal Reduction for Eigenvalues

- Lecture 28d - ARPACK (Quick lecture)

Lecture 27

We discussed the multi-grid preconditioner and then started back into eigenvalue algorithms. We did most of the work on looking at simple eigenvalue relationships and how to transform the spectrum of a matrix to get the power method to converge to other eigenvalues. But then we pointed out that you can also deflate eigenvalues.

- Notes

- Eigenvalue Algorithms (not complete) (MM&P - 28, 29)

- Lecture 27

- Reading

- Trefethen and Bau - Lecture 28

- Julia

- Eigenvalue Algorithms (html) (jl) (ipynb)

- Videos

- Lecture 27a - Multigrid

- Lecture 27b - Eigenvalue Algorithms

Lecture 26

We discussed the types and examples of preconditioners.

- Notes

- Lecture 26

- Reading

-

- Preconditioning A discussion

- on types and examples of preconditioners. (MM&P - 27)

- Saad Section 10.3

- GvL, 11.5

- Tref Lecture 40

- Videos

- Lecture 26a - Preconditioning Intro

- Lecture 26b - Preconditioning Goal

- Lecture 26c - Preconditioning Examples

Lecture 25

We had a lecture on using the structure in GMRES and also doing CG via orthogonal polynomials; see the videos on blackboard for more information on these ideas too.

- Notes

- Lecture 25

- Reading

- Efficient GMRES Notes (MM&P - 25)

-

- Orthogonal Polynomials A discussion

- on orthogonal polynomials and how to derive CG via them! (MM&P - 24)

- Templates for the solution of linear systems

- Saad Section 6.4

- Saad Section 6.5

- Tref Lecture 35

- Julia

- Efficient GMRES (html) (jl) (ipynb)

- Orthogonal Polynomials (html) (jl) (ipynb)

- Videos

- Lecture 25a - GMRES Make sure to have the PDF notes handy while watching this!

- Lecture 25b - Orthogonal Polynomials

- Lecture 25 - 2017 - Orthogonal Polynomials

Lecture 24

We finished our derivation of the efficient CG method based on updating the Cholesky factorization.

- Notes

- Lecture 24

- Reading

- Conjugate Gradient (MM&P - 23)

- Videos

- Lecture 24a - Conjugate Gradient Overview

- Lecture 24b - CG Results Make sure to have the PDF notes handy while watching this!

Lecture 23

We saw that the Lanczos process follows from the Arnoldi process on a symmetric matrix. We also saw that the Arnoldi vectors span the Krylov subspace. Then we briefly introduced CG.

- Notes

- Lecture 23

- Julia

- Arnoldi and Lanczos (html) (jl) (ipynb)

- A simple CG (html) (jl) (ipynb)

- Reading

- MM&P - 22

- Videos

- Lecture 23a - Arnoldi and Lanczos in Julia

- Lecture 23b - Lanczos Proofs

- Lecture 23c - Krylov Methods

Lecture 22

We showed that the Krylov subspace must contain the solution if it stops growing. Then we also stated the Arnoldi process.

- Notes

- Lecture 22

- Julia

- Simple Krylov Subspaces are Ill-conditioned (html) (jl) (ipynb)

- Reading

- Krylov methods (MM&P - 21, 22)

- Krylov methods 2022

- Conjugate Gradient

- Saad Section 6.3, 6.5

- Trefethen and Bau, Lecture 33, 35

- GvL Algorithm 10.1.1

- Videos

- Lecture 22a - Krylov Subspace

- Lecture 22b - From Krylov to Arnoldi

Lecture 21

We started working on Krylov methods after seeing some examples of matrix functions for graphs.

- Notes

- Lecture 21

- Julia

- Linear Discriminant Analysis (html) (jl) (ipynb)

- Reading

- Krylov methods (MM&P - 21)

- Krylov methods 2022

- Saad Section 6.5

- Trefethen and Bau, Lecture 35

- Videos

- Lecture 21a - Matrix Functions and Graphs

- Lecture 21b - Generalized Eigenvectors

- Lecture 21c - Krylov Methods Intro

Lecture 20

We discussed more advanced versions of some of the problems we saw in class. This includes sequences of linear systems, rank-1 updates, functions of a matrix, and generalized eigenvalues.

- Notes

- Lecture 20

- Reading

- Advanced problems (MM&P - 26, 27)

- Sherman-Morrison-Woodbury Formula

- GvL 9.1 - Functions of a matrix

- GvL 8.7 - Generalized eigenvalue decomposition

- GvL 2.1.5 - Update formula

- Videos

- Lecture 20a - Multiple Righthand Sides

- Lecture 20b - Rank 1 updates

- Lecture 20c - Matrix Functions

Lecture 19

We finished up talking about backwards stability and the issues with variance. Then we talked about cases where I've encountered floating point issues. In solving quadratic equations, in the Hurwitz Zeta function, and in the PageRank linear system. Then we dove into the outline for the proof that Gaussian elimiantion with partial pivoting is backwards stable. We did not get through this, but you should read it on your own!

- Reading

- Error analysis of LU (MM&P - 20)

- PageRank (in Analysis notes)

- Notes

- Lecture 19

- Julia

- Accurate PageRank versions (html) (jl) (ipynb)

- Videos

- Lecture 19a - Backwards Stability

- Lecture 19b - Quadratic Accuracy

- Lecture 19c - PageRank

- Lecture - LU is Backwards Stable

Lecture 18

We investigated how computers store floating point numbers in the IEEE format.

This then led to an understanding of the guarantees they make about basis arithmetic operations. We used this to understand why some implementations of codes are better than others, which gives rise to the idea of a backwards stable algorithm.

- Notes

- Lecture 18

- Julia

- Floating Point systems (html) (jl) (ipynb)

- Variance computations (html) (jl) (ipynb) (Covered in Lecture 19)

- Reading

- Tref Lecture 13, 14, 17

- Golub and van Loan, sections 2.7, 3.1, 3.3.2

- Numerical stability

- Floating-point

- Backwards Stability (in Analysis notes) (MM&P - 19)

- Videos

- Lecture 18a - Steepest Descent Analysis

- Lecture 18b - IEEE Floats

- Lecture 18c - Backwards Error

Lecture 17

We saw a lot today! The goal of the class was to establish kappa(A) as a fundamental quantity. This involved: 2. the SVD of a matrix exists. 4. ||A||_2 is orthogonally invariant. 5. ||A||_2 = sigma_max. This follows from orthogonal invariance. 6. ||A^{-1}||_2 = 1/sigma_min. This again follows from orthogonal invariance. 3. the SVD is the eigenvalue decomposition for sym. pos. def matrices. 7. ||A||_2, ||A||^{-1}_2 = lambda_max and 1/\lambda_min for sym. pos. def.

Then we showed that Richardson converges at a rate that depend on kappa(A) the 2-norm condition number.

- Notes

- Lecture 17

- Reading

- The condition number as a fundamental quantity (MM&P 17, 18)

- GvL 2.4 (and other parts of Chapter 2)

- GvL 11.3.2.

- Videos

- Lecture 17a - Conditioning-

- Lecture 17b - The SVD

- Lecture 17c - 2-norm Condition Number

Lecture 16

We started by going over the next half of class. Then we started analyzing algorithms for linear systems by counting flops for LU, although this count was not optimal. We saw two problems that Julia can't solve (see below) that deal with conditioning and stability. Then we moved into a discussion about conditioning. A problem's conditioning just tells us how sensitive the problem is to changes in the input. We wouldn't expect to accurately solve a problem that is highly sensitive to changes in the input on a computer. Each problem has a condition number, and there is a number that arises so frequently that we call it the condition number of a matrix. We first established what the condition number of a matrix is.

- Notes

- Lecture 16

- Julia

- A linear system that Julia can't solve (html) (jl) (ipynb) Note that if you perturb this system by eps(1.0)*randn(60,60), then it can!

- The Hilbert Linear System (that Julia can't solve) (html) (jl) (ipynb)

- Reading

- Analyzing matrix computations (MM&P - 16, 17)

- After midterm outline

- Tref Lecture 13, 14, 17

- Golub and van Loan, sections 2.7, 3.1, 3.3.2

- Numerical stability

- Videos

- Lecture 16a - FLOP Counts

- Lecture 16b - Bad solutions to linear systems

- Lecture 16c - Conditioning Intro

Lecture 15

We went over Givens and Householder for QR, and then went through the midterm.

- Notes

- Lecture 15

- Julia

- QR for Least Squares (html) (jl) (ipynb)

- Reading

- QR and Least Squares (MM&P - 15)

- Trefethen and Bau, Lecture 8

- Trefethen and Bau, Lecture 11

- Golub and van Loan, section 5.5

- Videos

- Lecture 14a - Givens and QR

- Lecture 14b - Householder

Lecture 13

We saw how to derive the QR factorization of a matrix as what arises with Gram Schmidt.

- Notes

- Lecture 13

- Julia

- QR for Least Squares (html) (jl) (ipynb) (Not covered)

- Reading

- QR and Least Squares (MM&P - 15)

- Midterm Review

- Trefethen and Bau, Lecture 8

- Trefethen and Bau, Lecture 11

- Golub and van Loan, section 5.5

- Variable Elimination and Least Squares (MM&P - 14)

- Videos

- Lecture 13a - QR Factorization

- Lecture 13b - Review (Optional for 2020!)

Lecture 12

We saw pivoting in variable elimination to ensure that it always works and also how to do variable elimination for least squares.

- Notes

- Lecture 12

- Julia

- Pivoting in LU (html) (jl) (ipynb)

- Elimination in Least Squares (html) (jl) (ipynb)

- Reading

- GvL 3.2, 3.4

- GvL 4.2

- Tref Lecture 20, 21

- Golub and van Loan, section 5.1.8, 5.2.5, 5.3

- Trefethen and Bau, Lecture 11

- Videos

- Lecture 12a - LU Recap

- Lecture 12b - Pivoted LU

- Lecture 12c - Finite Least Squares

Lecture 11

We saw variable elimination and how it gives rise to the LU factorization of a matrix.

- Notes

- Lecture 11

- Julia

- Variable Elimination (html) (jl) (ipynb)

- Reading

- Elimination (MM&P - 11)

- GvL 3.2, 3.4

- GvL 4.2

- Tref Lecture 20, 21

- Videos

- Lecture 11a - Power Method Analysis

- Lecture 11b - Finite Algorithms and LU

Lecture 10

We saw that Gauss-Seidel is equivalent to Steepest Descent on symmetric matrices with unit diagonal and some intro material on eigenvalue algorithms.

- Notes

- Lecture 10

- Reading

- Gauss-Seidel and Jacobi (MM&P - 9)

- Eigenvalues and the Power Method (MM&P - 10)

- Golub and van Loan, section 7.3

- Saad Chapter 4

- Julia

- Eigenvectors for Phase Retrieval (html) (jl) (ipynb)

- Eigenvalue Algorithms (html) (jl) (ipynb) Shown in Lecture 11

- Videos

- Lecture 10a - Gauss Seidel and Coordinate Descent

- Lecture 10b - Eigenvalues and the Power Method

Lecture 9

We saw the Jacobi and Gauss-Seidel iterations today.

- Notes

- Lecture 9

- Readings

- Gauss-Seidel and Jacobi (MM&P - 9)

- Golub and van Loan, section 11.2

-

- Saad Chapter 5

- Julia

- Jacobi and Gauss Seidel (html) (jl) (ipynb)

- Videos

- Lecture 9a - Jacobi Code

- Lecture 9b - Jacobi Analysis

- Lecture 9c - Jacobi Typo and Gauss Seidel

Lecture 8

We finished our work on the steepest descent algorithm and moved into the coordinate descent algorithm.

- Notes

- Lecture 8

- Readings

- Steepest descent (MM&P - 8)

- Julia

- Steepest descent and Ax=b (html) (jl) (ipynb)

- Videos

- Lecture 8a - Quadratic functions

- Lecture 8b - Optimization and Gradient Descent

- Lecture 8c - Coordinate Descent

Lecture 7

We did a bit on norms, then went back to show how to use the Richardson method to give us a way of solving any system of linear equations or least squares problem. Then we began to study a quadratic function that will give us another way to solve linear systems.

- Notes

- Lecture 7

- Reading

- Aside on norms (MM&P - 6)

- Simple Matrix Algorithms (MM&P - 7)

- GvL Section 2.3, 2.4

- Tref Lecture 3, 4

- Julia

- Quadratic equations and Ax=b (html) (jl) (ipynb)

- Norms (html) (jl) (ipynb)

- Videos

- Lecture 7a - Norms

- Lecture 7b - Richardson

- Lecture 7c - Gradient Descent

Lecture 6

We saw a number of derivations of the same type of algorithm called Richardson's method, based on the Neumann series. (We'll see more in the next class too!) We also saw how to check the solution of a linear system of equations, and saw norms.

- Notes

- Lecture 6

- Reading

- Simple Matrix Algorithms (MM&P - 7)

- Other reading https://en.wikipedia.org/wiki/Modified_Richardson_iteration

- Aside on norms (MM&P - 6)

- GvL Section 2.3, 2.4

- Tref Lecture 3, 4

- Notes on how to prove that norms are equivalent by Steven Johnson

- Julia

- Neumann series solver (html) (jl) (ipynb)

- Norms (not covered in class) (html) (jl) (ipynb)

- Videos

- Lecture 6a - Neumann Series

- Lecture 6b - Residual and Error

- Lecture 6c - Neumann Algorithm

- Lecture 6d - Norms

Lecture 5

We saw CSC and CSR formats as well as the Neumann series method to build the "inverse" of a matrix (but you shouldn't do that).

- Notes

- Lecture 5

- Reading

- Sparse Matrix Operations (MM&P - 4)

- Simple Matrix Algorithms (MM&P - 7)

- Julia

- Operations with sparse matrices in Julia (html) (jl) (ipynb)

- Videos

- Lecture 5a

- Lecture 5b

- Lecture 5c

Lecture 4

(Video recorded from 2018 )

We looked at structure in matrices: Symmetric positive (semi) definite, Orthogonal, M-matrix, Triangular, Hessenberg.

Then we saw how you could model the game of Candyland as a matrix problem. We used this as an example to study how to actually use sparse matrix operations in Julia.

- Notes

- Lecture 4 (From 2018 as well...)

- Reading

- Structure in matrices (MM&P - 3)

- Sparse Matrix Operations (MM&P - 5)

- Matrix structure (pdf)

- Julia

- Operations with sparse matrices in Julia (html) (jl) (ipynb)

- This uses data files candyland-matrix.csv candyland-coords.csv candyland-cells.csv

- Videos

- Lecture 4a

- Lecture 4b

Lecture 3

We looked at structure in matrices: Hankel, Toeplitz, Circulant, Sparse.

- Notes

- Lecture 3

- Reading

- Structure in matrices (MM&P - 3)

- Matrix structure (pdf)

- Julia

- Examples of Matrices and Least Squares (html) (jl) (ipynb)

- General reading on sparse matrices

-

- Direct methods for sparse linear systems (Chapter 2) this is

- probably the best introduction to sparse matrix operations

- [Direct link to Chapter 2]((http://epubs.siam.org.ezproxy.lib.purdue.edu/doi/pdf/10.1137/1.9780898718881.ch2)

- Saad Chapter 3

- Videos

- Lecture 3a

- Lecture 3b

Lecture 2

We started introducing our notation and discussed what a Matrix is, along with the three fundamental problems of linear algebra.

- Notes

- Lecture 2

- Reading

- What is a matrix (MM&P - 1)

- Course notation (pdf) (MM&P - 2)

- Matrix structure (pdf) (MM&P - 3)

- Matrices and linear algebra (pdf)

- Viral spreading (MM&P - 4)

- Julia

- Examples of Matrices and Least Squares (2019) (html) (jl) (ipynb)

- Examples of Matrices and Least Squares (2018) (html) (jl) (ipynb)

- Viral Spreading (2020) (html) (jl) (ipynb)

- Videos

- Lecture - Viral Spreading

- Lecture 2a

- Lecture 2b

- Lecture 2c

Lecture 1

We introduced the class and reviewed the syllabus.

- Readings

- Syllabus

- Slides

- 2024 Intro Slides on Flipped Classroom

- Lecture 1

- Video

- Lecture 1a

In-class discussion periods

- Lecture 1

- 2024-Lecture 1

- Intro to Julia (html) (jl) (ipynb)

- Lecture 2

- Notes for Lecture 2

- 2024-Lecture 2

- Objects in Julia (html) (jl) (ipynb)

- Least squares example (html) (jl) (ipynb)

- Julia packages (html) (jl) (ipynb)

- Lecture 3

- Notes for Lecture 3

- 2024-Lecture 3

- Sparse vs. Dense in Julia (html) (jl) (ipynb)

- Lecture 4

- Notes for Lecture 4

- 2024-Lecture 4

- Special cases for matrices (html) (jl) (ipynb)

- Lecture 5

- Notes for Lecture 5

- 2024-Lecture 5

- Lecture 7

- Notes for Lecture 7

- 2024-Lecture 7

- Lecture 8

- Notes for Lecture 8

- 2024-Lecture 8

- Jordan Blocks and Matrix Powers (html) (jl) (ipynb)

- Lecture 9

- Notes for Lecture 9

- 2024-Lecture 9

- Lecture 10

- Notes for Lecture 10

- 2024-Lecture 10

- Power method (html) (jl) (ipynb)

- Lecture 11

- Notes for Lecture 11

- 2024-Lecture 11

- Lecture 12

- Notes for Lecture 12

- 2024-Lecture 12

- Pivoting and rank (html) (jl) (ipynb)

- Lecture 13

- Notes for Lecture 13

- 2024-Lecture 13

- Lecture 15

- Notes for Lecture 15

- 2024-Lecture 15

- Lecture 16

- Notes for Lecture 16

- 2024-Lecture 16

- Lecture 18

- Notes for Lecture 18

- 2024-Lecture 18

- Lecture 19

- Notes for Lecture 19

- 2024-Lecture 19

- Lecture 20

- Notes for Lecture 20

- 2024-Lecture 20

-

FloatingPointErrors.jl. You can add this to Julia by doing

] add https://github.com/dgleich/FloatingPointErrors.gitthen

using FloatingPointErrors - Lecture 21

- Notes for Lecture 21

- 2024-Lecture 21

- Lecture 22

- Notes for Lecture 22

- 2024-Lecture 22

- Lecture 23

- Notes for Lecture 23

- 2024-Lecture 23

- Lecture 24

- Notes for Lecture 24

- 2024-Lecture 24

- MINRES vs. CG (html) (jl) (ipynb)

- Lecture 25

- Notes for Lecture 25

- 2024-Lecture 25

- MINRES gives A orthogonal residuals (html) (jl) (ipynb)

- MINRES with orthogonal polynomials (html) (jl) (ipynb)

- Lecture 26

- Notes for Lecture 26

- 2024-Lecture 26

- Lecture 27

- Notes for Lecture 27

- 2024-Lecture 27

- Three demos (html) (jl) (ipynb)

- Lecture 28

- Notes for Lecture 28

- 2024-Lecture 28

- Lecture 29

- Notes for Lecture 29

- 2024-Lecture 29

- Lecture 30

- Notes for Lecture 30

- 2024-Lecture 30