Books and Surveys

|

|

|

Delaunay Mesh Generation.

Siu-Wing

Cheng, Tamal K. Dey, Jonathan R. Shewchuk. Published

by CRC Press (2012 December). Details and Sample

Chapters including a new algorithm for meshing 3D polyhedra with arbitrary

small angles (chapter 9).

Order

link

|

|

|

|

|

Delaunay

mesh generation of three dimensional

domains.

T. K. Dey. Technical Report

OSU-CISRC-9/07-TR64, October, 2007.

To appear in Tessellations in the sciences:

Virtues, Techniques and Applications of Geometric Tilings,

eds. R. van de Weygaert, G. Vegter, J. Ritzerveld & V.

Icke, Springer-Verlag, 2009.

Delaunay meshes are used in various applications such

as finite element analysis, computer graphics rendering,

geometric modeling, and shape analysis. As the

applications vary, so do the domains to be meshed. Although

meshing of geometric domains with Delaunay simplices

has been around for a while, provable techniques for meshing

various types of three dimensional domains have been developed

only recently. We devote this article to presenting these

techniques. We survey various related results and detail a

few core algorithms that have provable guarantees and are amenable

to practical implementation. Delaunay refinement, a paradigm

originally developed for guaranteeing shape quality of mesh

elements, is a common thread in these algorithms. We finish

the article by listing a set of open questions.

|

|

|

|

|

Sample

based geometric

modeling

T. K. Dey.

Chapter in Geometric and Algorithmic

Aspects of Computer-Aided Design and Manufacturing,

eds Janardan, Smid, Dutta. DIMACS series

in Discrete Mathematics and Theoretical Computer Science,

Volume 67, 2005.

A new paradigm for

digital modeling of physical objects from

sample points is fast emerging due to recent

advances in scanning technology. Researchers

are investigating many of the traditional

modeling problems in this new setting. We call this

new area sample based geometric

modeling. The purpose

of this article is to expose the readers to this

new modeling paradigm through three basic problems,

namely surface reconstruction, medial axis approximation,

and shape segmentation. The algorithms for

these three problems developed by the author and his

co-authors are described.

|

|

Curve

and surface reconstruction

T. K. Dey.

Chapter in Handbook of Discrete

and Computational Geometry, Goodman and O'Rourke eds.,

CRC Press, 2nd edition (2004)

New version for 3rd edition published (2016).

The problem of reconstructing

a shape from its sample appears in many

scientific and engineering applications.

Because of the variety in shapes and applications,

many algorithms have been proposed over

the last two decades, some of which exploit

application-specific information and some

of which are more general. We will concentrate

on techniques that apply to the general setting and have

proved to provide some guarantees on the quality

of reconstruction.

|

|

Papers

|

Limit computation over posets via minimal initial functors

T. K. Dey, M. Lesnick. https://arxiv.org/abs/2601.00209 (2026)

It is well known that limits can be computed by restricting along an initial functor, and that this can often simplify limit computation. We systematically study the algorithmic implications of this idea for diagrams indexed by a finite poset. We say an initial functor $F:C\to D$ with $C$ small is \emph{minimal} if the sets of objects and morphisms of $C$ each have minimum cardinality, among the sources of all initial functors with target $D$. For $Q$ a finite poset or $Q\subseteq N^d$ an interval (i.e., a convex, connected subposet), we describe all minimal initial functors $F:P\to Q$ and in particular, show that $F$ is always a poset inclusion. We give efficient algorithms to compute a choice of minimal initial functor. In the case that $Q\subseteq N^d$ is an interval, we give asymptotically optimal bounds on $|P|$, the number of relations in $P$ (including identities), in terms of the number $n$ of minima of $Q$: We show that $|P|=\Theta(n)$ for $d\leq 3$, and $\Theta(n^2)$ for $d>3$. We apply these results to give new bounds on the cost of computing $\lim G$ for a functor $G:Q\to Vec$ valued in vector spaces. For $Q$ connected, we also give new bounds on the cost of computing the \emph{generalized rank} of $G$ (i.e., the rank of the induced map $\lim G\to \mathrm{colim}\, G$), which is of interest in topological data analysis.
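As a small illustration of the opening sentence of this abstract, the block below records a standard category-theoretic fact rather than a result of the paper: a functor $F:P\to Q$ is initial when every comma category $(F\downarrow q)$ is nonempty and connected, and restriction along such an $F$ preserves limits. The element $q_0$ is an illustrative assumption (a poset with a minimum), chosen because it gives the simplest possible initial functor.

```latex
% Restriction along an initial functor F : P -> Q preserves limits.
\[
  \lim_{Q} G \;\cong\; \lim_{P}\,(G \circ F)
  \qquad \text{for every initial } F : P \to Q .
\]
% Assumed example: Q has a minimum element q_0. The inclusion
% F : \{q_0\} \hookrightarrow Q is initial, because each comma category
% (F \downarrow q) consists of the single object q_0 \le q.
\[
  \lim_{Q} G \;\cong\; G(q_0).
\]
% Generalized rank, as defined in the abstract for connected Q:
\[
  \operatorname{rank} G \;=\; \operatorname{rank}\bigl(\lim G \to \operatorname{colim} G\bigr).
\]
```

In this one-minimum case the source poset has a single object, which is the extreme instance of the size reduction the abstract quantifies in terms of the number of minima of $Q$.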

|

|

|

Fast free resolutions of bifiltered chain complexes

U. Bauer, T. K. Dey, M. Kerber, F. Russo, and M. Sols. to appear Proc. 42nd Internat. Sympos. Comput. Geom. (SoCG 2026), https://arxiv.org/abs/2512.08652

In a k-critical bifiltration, every simplex enters along a staircase with at most k steps. Examples with k>1 include degree-Rips bifiltrations and models of the multicover bifiltration. We consider the problem of converting a k-critical bifiltration into a 1-critical (i.e. free) chain complex with equivalent homology. This is known as computing a free resolution of the underlying chain complex and is a first step toward post-processing such bifiltrations. We present two algorithms. The first one computes free resolutions corresponding to path graphs and assembles them to a chain complex by computing additional maps. The simple combinatorial structure of path graphs leads to good performance in practice, as demonstrated by extensive experiments. However, its worst-case bound is quadratic in the input size because long paths might yield dense boundary matrices in the output. Our second algorithm replaces the simplex-wise path graphs with ones that maintain short paths which leads to almost linear runtime and output size. We demonstrate that pre-computing a free resolution speeds up the task of computing a minimal presentation of the homology of a k-critical bifiltration in a fixed dimension. Furthermore, our findings show that a chain complex that is minimal in terms of generators can be asymptotically larger than the non-minimal output complex of our second algorithm in terms of description size.

|

|

|

D-Gril: End-to-end topological learning with 2-parameter persistence

S. Mukherjee, S. N. Samaga, C. Xin, S. Oudot, T. K. Dey. to appear Proc. 42nd Internat. Sympos. Comput. Geom. (SoCG 2026), arXiv:2406.07100

End-to-end

topological learning using 1-parameter persistence is well-known. We

show that the framework can be enhanced using 2-parameter persistence

by adopting a recently introduced 2-parameter persistence based

vectorization technique called GRIL. We establish a theoretical

foundation for differentiating GRIL, producing D-GRIL. We show that

D-GRIL can be used to learn a bifiltration function on standard

benchmark graph datasets. Further, we exhibit that this framework can

be applied in the context of bio-activity prediction in drug discovery.

|

|

|

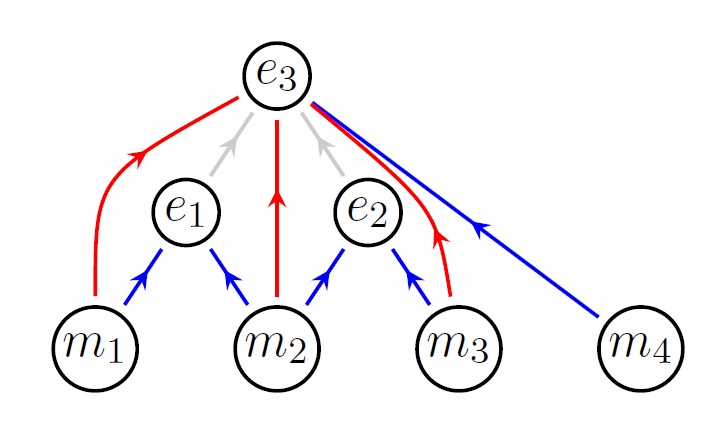

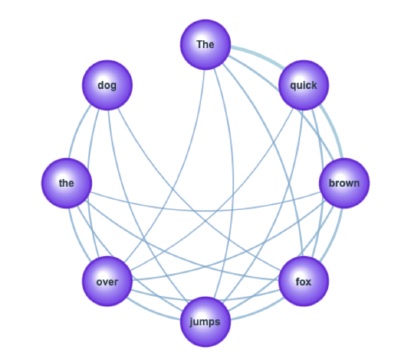

Halluzig: Hallucination detection by zigzag persistence

S. N. Samaga, G. Gonzalez-Arryo, T. K. Dey. to appear in EACL (Oral) (2026), https://arxiv.org/abs/2601.01552 (2026)

The

factual reliability of Large Language Models (LLMs) remains a critical

barrier to their adoption in high-stakes domains due to their

propensity to hallucinate. Current detection methods often rely on

surface-level signals from the model's output, overlooking the failures

that occur within the model's internal reasoning process. In this

paper, we introduce a new paradigm for hallucination detection by

analyzing the dynamic topology of the evolution of the model's layer-wise

attention. We model the sequence of attention matrices as a zigzag

graph filtration and use zigzag persistence, a tool from Topological

Data Analysis, to extract a topological signature. Our core hypothesis

is that factual and hallucinated generations exhibit distinct

topological signatures. We validate our framework, HalluZig, on

multiple benchmarks, demonstrating that it outperforms strong

baselines. Furthermore, our analysis reveals that these topological

signatures are generalizable across different models and that hallucination

detection is possible only using structural signatures from partial

network depth.
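One common way to turn a sequence of graphs into a zigzag filtration is to interleave each consecutive pair with their union. The sketch below illustrates that construction on thresholded attention matrices. It is an assumption made for illustration, not necessarily the construction used in HalluZig: the threshold `tau`, the symmetrization by `max`, and both function names are hypothetical.

```python
from typing import List, Set, Tuple

Edge = Tuple[int, int]
Matrix = List[List[float]]


def threshold_graph(attn: Matrix, tau: float) -> Set[Edge]:
    """Undirected edges (i, j), i < j, whose attention weight in either direction reaches tau."""
    n = len(attn)
    return {
        (i, j)
        for i in range(n)
        for j in range(i + 1, n)
        if max(attn[i][j], attn[j][i]) >= tau
    }


def zigzag_sequence(layers: List[Matrix], tau: float) -> List[Set[Edge]]:
    """Build G_0 -> G_0 u G_1 <- G_1 -> G_1 u G_2 <- ... where every arrow is an inclusion."""
    graphs = [threshold_graph(a, tau) for a in layers]
    seq = [graphs[0]]
    for prev, nxt in zip(graphs, graphs[1:]):
        seq.append(prev | nxt)  # forward inclusion into the union
        seq.append(nxt)         # backward inclusion from the next graph
    return seq


# Two toy "layers" on three tokens (assumed data).
layers = [
    [[0.0, 0.9, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]],
    [[0.0, 0.0, 0.0], [0.0, 0.0, 0.8], [0.0, 0.0, 0.0]],
]
seq = zigzag_sequence(layers, tau=0.5)
```

The resulting list alternates edge insertions and deletions, which is the input shape that zigzag persistence software expects.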

|

|

|

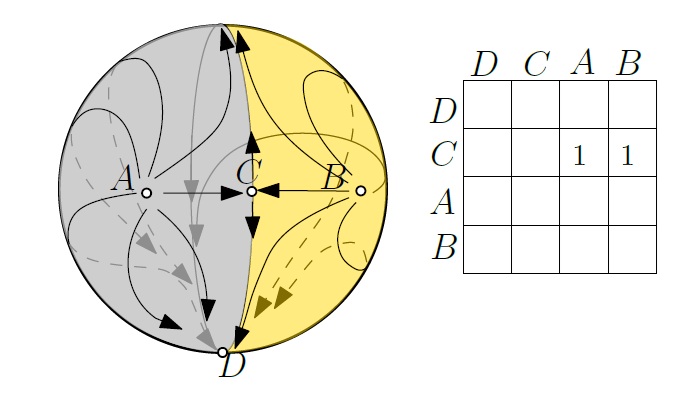

Conley-Morse persistence barcode: a homological signature of a combinatorial bifurcation

T. K. Dey, M. Lipinski, M. Soriano-Trigueros. https://arxiv.org/abs/2504.17105 (2025)

Bifurcation

is one of the major topics in the theory of dynamical systems. It

characterizes the nature of qualitative changes in parametrized

dynamical systems. In this work, we study combinatorial bifurcations

within the framework of combinatorial multivector field theory--a young

but already well-established theory providing a combinatorial model for

continuous-time dynamical systems (or simply, flows). We introduce the

Conley-Morse persistence barcode, a compact algebraic descriptor of

combinatorial bifurcations. The barcode captures structural changes in

a dynamical system at the level of Morse decompositions and provides a

characterization of the nature of observed transitions in terms of the

Conley index. The construction of the Conley-Morse persistence barcode

builds upon ideas from topological data analysis (TDA). Specifically,

we consider a persistence module obtained from a zigzag filtration of

topological pairs (formed by index pairs defining the Conley index)

over a poset. Using gentle algebras, we prove that this module

decomposes into simple intervals (bars) and compute them with

algorithms from TDA known for processing zigzag filtrations.

|

|

|

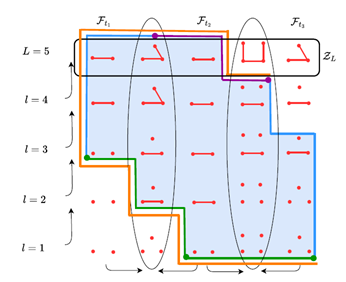

Computing Projective Implicit Representations from Poset Towers

T. K. Dey and F. Russold. https://arxiv.org/abs/2505.08755 (2025)

A family of simplicial complexes, connected with simplicial maps and indexed by a poset $P$, is called a poset tower. The concept of poset towers subsumes classical objects of study in the persistence literature, as, for example, one-critical multi-filtrations and zigzag filtrations, but also allows multi-critical simplices and arbitrary simplicial maps. The homology of a poset tower gives rise to a $P$-persistence module. To compute this homology globally over $P$, in the spirit of the persistence algorithm, we consider the homology of a chain complex of $P$-persistence modules, $C_{l-1}\leftarrow C_l\leftarrow C_{l+1}$, induced by the simplices of the poset tower. Contrary to the case of one-critical filtrations, the chain-modules $C_l$ of a poset tower can have a complicated structure. In this work, we tackle the problem of computing a representation of such a chain complex segment by projective modules and $P$-graded matrices, which we call a projective implicit representation (PiRep). We give efficient algorithms to compute asymptotically minimal projective resolutions (up to the second term) of the chain modules and the boundary maps and compute a PiRep from these resolutions. Our algorithms are tailored to the chain complexes and resolutions coming from poset towers and take advantage of their special structure. In the context of poset towers, they are fully general and could potentially serve as a foundation for developing more efficient algorithms on specific posets.

|

|

|

Computing connection matrix and persistence efficiently for a Morse decomposition

T. K. Dey, A. Haas, M. Lipinski. SIAM J. Applied Dynamical Systems, Vol. 25 (1), pp. 108-130 (2026) https://arxiv.org/abs/2502.19369 (2025)

Morse

decompositions partition the flows in a vector field into equivalent

structures. Given such a decomposition, one can define a further

summary of its flow structure by what is called a connection matrix.

These

matrices, a generalization of Morse boundary operators from classical

Morse theory, capture the connections made by the flows among the

critical structures - such as attractors, repellers, and orbits - in a

vector field. Recently, in the context of combinatorial dynamics, an

efficient persistence-like algorithm to compute connection matrices has

been proposed in [DLMS24]. We show that, actually, the classical

persistence algorithm with exhaustive reduction retrieves connection

matrices, both simplifying the algorithm of [DLMS24] and bringing

the theory of persistence closer to combinatorial dynamical systems. We

supplement this main result with an observation: the concept of

persistence as defined for scalar fields naturally adapts to Morse

decompositions whose Morse sets are filtered with a Lyapunov function.

We conclude by presenting preliminary experimental results.
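The "persistence algorithm with exhaustive reduction" mentioned above can be sketched as follows, assuming Z/2 coefficients and a boundary matrix stored as one set of row indices per column. This is a minimal illustration of the reduction step only. It does not build a Morse decomposition or extract a connection matrix, and the function name and the triangle example are hypothetical.

```python
from typing import Dict, List, Set, Tuple


def reduce_exhaustively(columns: List[Set[int]]) -> Tuple[List[Set[int]], Dict[int, int]]:
    """Left-to-right column reduction over Z/2 of a filtered boundary matrix.

    Standard reduction cancels only the lowest entry (the pivot) of a column.
    Exhaustive reduction also cancels every other entry that is the pivot of
    an earlier reduced column.
    """
    reduced: List[Set[int]] = []
    pivot_owner: Dict[int, int] = {}  # pivot row -> index of the column owning it
    for j, column in enumerate(columns):
        col = set(column)
        bound = float("inf")
        while True:
            # Visit the entries of the column from the lowest row upward.
            below = [i for i in col if i < bound]
            if not below:
                break
            bound = max(below)
            if bound in pivot_owner:
                # Adding the earlier column removes this entry and only
                # changes entries in rows with smaller index.
                col ^= reduced[pivot_owner[bound]]
        if col:
            pivot_owner[max(col)] = j
        reduced.append(col)
    return reduced, pivot_owner


# Filtered triangle: vertices 0, 1, 2; edges 3 = {0,1}, 4 = {1,2}, 5 = {0,2}; face 6.
triangle = [set(), set(), set(), {0, 1}, {1, 2}, {0, 2}, {3, 4, 5}]
reduced, pivot_owner = reduce_exhaustively(triangle)
```

On this example column 4 ends as `{0, 2}` instead of `{1, 2}`: its pivot (row 2) is unchanged, but the entry in row 1, which is the pivot of column 3, has been cancelled as well. That extra cancellation is the only difference from the standard reduction.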

|

|

|

Quasi Zigzag Persistence: A Topological Framework for Analyzing Time-Varying Data

T. K. Dey, S. Samaga. Spotlight paper in TAG-DS, to appear in PMLR. ArXiv https://arxiv.org/abs/2502.16049v1. (2025)

In

this paper, we propose Quasi Zigzag Persistent Homology (QZPH) as a

framework for analyzing time-varying data by integrating multiparameter

persistence and zigzag persistence. To this end, we introduce a stable

topological invariant that captures both static and dynamic features at

different scales. We present an algorithm to compute this invariant

efficiently. We show that it enhances machine learning models when

applied to tasks such as sleep-stage detection, demonstrating its

effectiveness in capturing the evolving patterns in time-evolving

datasets.

Talk Slides

|

|

|

Decomposing multiparameter persistence modules

T. K. Dey, J. Jendrysiak, and M. Kerber. Proc. 41st Internat. Sympos. Comput. Geom. (SoCG 2025).

Dey and Xin (J.Appl.Comput.Top., 2022) describe an algorithm to

decompose finitely presented multiparameter persistence modules using a

matrix reduction algorithm. Their algorithm only works for modules

whose generators and relations are distinctly graded. We extend their

approach to work on \emph{all} finitely presented modules and introduce

several improvements that lead to significant speed-ups in practice.

Our algorithm is FPT with respect to the maximal number of relations

with the same degree and with further optimisation we obtain an

$O(n^3)$ algorithm for interval-decomposable modules. In particular, we

can decide interval-decomposability in this time. As a by-product of

the proofs of correctness we develop a theory of parameter restriction

for persistence modules. Our algorithm is implemented as a software

library \textsc{aida} which is the first to enable the decomposition of

large inputs. We show its capabilities via extensive experimental

evaluation.

|

|

|

Apex representatives

T. K. Dey, T. Hou and D. Morozov. Proc. 41st Internat. Sympos. Comput. Geom. (SoCG 2025).

Given

a zigzag filtration, we want to find its barcode representatives, i.e.,

a compatible choice of bases for the homology groups that diagonalize

the linear maps in the zigzag. To achieve this, we convert the

input zigzag to a levelset zigzag of a real-valued function. This

function generates a Mayer--Vietoris pyramid of spaces, which generates

an infinite strip of homology groups. We call the origins of

indecomposable (diamond) summands of this strip their apexes and give

an algorithm to find representative cycles in these apexes from

ordinary persistence computation. The resulting representatives map

back to the levelset zigzag and thus yield barcode representatives for

the input zigzag. Our algorithm for lifting a $p$-dimensional cycle

from ordinary persistence to an apex representative

takes $O(p \cdot m \log m)$ time. From this we can recover zigzag

representatives in time $O(\log m + C)$, where $C$ is the size of the

output.

|

|

|

A fast algorithm for computing zigzag representatives

T. K. Dey, T. Hou and D. Morozov. Proc. ACM-SIAM Sympos. Discrete Algorithms (SODA 2025).

Zigzag filtrations of simplicial complexes generalize the usual filtrations by allowing simplex deletions in addition to simplex insertions. The barcodes computed from zigzag filtrations encode the evolution of homological features. Although one can locate a particular feature at any index in the filtration using existing algorithms, the resulting representatives may not be compatible with the zigzag: a representative cycle at one index may not map into a representative cycle at its neighbor. For this, one needs to compute compatible representative cycles along each bar in the barcode. Even though it is known that the barcode for a zigzag filtration with m insertions and deletions can be computed in O(m^w) time, it is not known how to compute the compatible representatives so efficiently. For a non-zigzag filtration, the classical matrix-based algorithm provides representatives in O(m^3) time, which can be improved to O(m^w). However, no known algorithm for zigzag filtrations computes the representatives with the O(m^3) time bound. We present an O(m^2n) time algorithm for this problem, where n\leq m is the size of the largest complex in the filtration.
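For the non-zigzag case, the classical matrix-based algorithm referred to in this abstract can be sketched as below, assuming Z/2 coefficients. It maintains R = D·V while reducing the boundary matrix D, and reads representatives off R (for finite bars) and V (for essential classes). This is the textbook cubic-time reduction, not the zigzag algorithm of the paper, and the function name and example are hypothetical.

```python
from typing import Dict, List, Optional, Set, Tuple

Bar = Tuple[int, Optional[int], Set[int]]


def bars_with_representatives(boundary: List[Set[int]]) -> List[Bar]:
    """Return (birth, death, cycle) triples for a simplex-wise filtration.

    boundary[j] is the set of row indices in column j of the boundary matrix.
    death is None for a class that never dies.
    """
    m = len(boundary)
    R = [set(c) for c in boundary]
    V = [{j} for j in range(m)]       # V starts as the identity, so R = D * V
    low_to_col: Dict[int, int] = {}   # pivot row -> column with that pivot
    for j in range(m):
        while R[j] and max(R[j]) in low_to_col:
            k = low_to_col[max(R[j])]
            R[j] ^= R[k]              # the same column addition is applied
            V[j] ^= V[k]              # to both R and V
        if R[j]:
            low_to_col[max(R[j])] = j

    bars: List[Bar] = []
    for i, j in low_to_col.items():
        bars.append((i, j, R[j]))     # cycle born at i, bounded once j is added
    for j in range(m):
        if not R[j] and j not in low_to_col:
            bars.append((j, None, V[j]))  # unpaired zero column: essential cycle
    return bars


# Filtered triangle: vertices 0, 1, 2; edges 3 = {0,1}, 4 = {1,2}, 5 = {0,2}; face 6.
triangle = [set(), set(), set(), {0, 1}, {1, 2}, {0, 2}, {3, 4, 5}]
bars = bars_with_representatives(triangle)
```

Here the 1-cycle created by edge 5 and filled by the triangle 6 is represented by the three edges `{3, 4, 5}`. In a zigzag filtration simplices are also deleted, so a single reduced column no longer serves as a representative along the whole bar, which is the compatibility problem the abstract describes.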

|

|

|

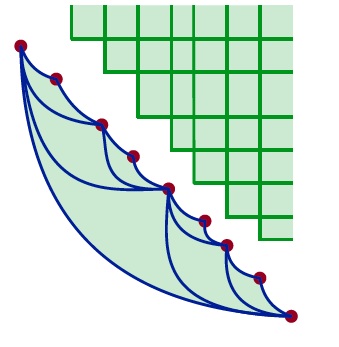

Computing generalized ranks of persistence modules via unfolding to zigzag modules

T. K. Dey, C. Xin. ArXiv arxiv.org/abs/2403.08110 (2024).

For a $P$-indexed persistence module $M$, the (generalized) rank of $M$ is defined as the rank of the limit-to-colimit map for the diagram of vector spaces of $M$ over the poset $P$. For 2-parameter persistence modules, recently a zigzag persistence based algorithm has been proposed that takes advantage of the fact that generalized rank for 2-parameter modules is equal to the number of full intervals in a zigzag module defined on the boundary of the poset. Analogous definition of boundary for $d$-parameter persistence modules or general $P$-indexed persistence modules does not seem plausible. To overcome this difficulty, we first unfold a given $P$-indexed module $M$ into a zigzag module $M_{ZZ}$ and then check how many full interval modules in a decomposition of $M_{ZZ}$ can be folded back to remain full in a decomposition of $M$. This number determines the generalized rank of $M$. For special cases of degree-$d$ homology for $d$-complexes, we obtain a more efficient algorithm including a linear time algorithm for degree-1 homology in graphs.

|

|

|

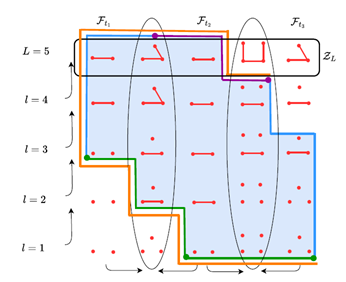

Efficient algorithms for computing complexes of persistence modules with applications

T. K. Dey, S. Samaga, F. Russold. Proc. 40th Internat. Sympos. Comput. Geom. (SoCG 2024), ArXiv preprint: arXiv:2403.10958

We

extend the persistence algorithm, viewed as an algorithm computing the

homology of a complex of free persistence or graded modules, to

complexes of modules that are not free. We replace persistence modules

by their presentations and develop an efficient algorithm to compute

the homology of a complex of presentations. To deal with inputs that

are not given in terms of presentations, we give an efficient algorithm

to compute a presentation of a morphism of persistence modules. This

allows us to compute persistent (co)homology of instances giving rise

to complexes of non-free modules. Our methods lead to a new efficient

algorithm for computing the persistent homology of simplicial towers

and they enable efficient algorithms to compute the persistent homology

of cosheaves over simplicial towers and cohomology of persistent

sheaves on simplicial complexes. We also show that we can compute the

cohomology of persistent sheaves over arbitrary finite posets by

reducing the computation to a computation over simplicial complexes.

|

|

|

Cup product persistence and its efficient computation

T. K. Dey, A. Rathod. Proc. 40th Internat. Sympos. Comput. Geom. (SoCG 2024), Arxiv preprint: arXiv:2212.01633.

It

is well-known that the cohomology ring has a richer structure than

homology groups. However, until recently, the use of cohomology in

persistence setting has been limited to speeding up of barcode

computations. Some of the recently introduced invariants, namely,

persistent cup-length, persistent cup modules, and persistent Steenrod

modules to some extent, fill this gap. When added to the standard

persistence barcode, they lead to invariants that are more

discriminative than the standard persistence barcode. In this work, we

devise an O(dn^4) algorithm for computing the persistent k-cup modules

for all $k\in\{2,\dots, d\}$, where $d$ denotes the dimension of the

filtered complex, and $n$ denotes its size. Moreover, we note that

since the persistent cup length can be obtained as a byproduct of our

computations, this leads to a faster algorithm for computing it.

Finally, we introduce a new stable invariant called partition modules

of cup product that is more discriminative than persistent k-cup module

and devise an O(c(d)n^4) time algorithm for computing it.

|

|

|

Computing zigzag vineyard efficiently including expansions and contractions

T. K. Dey, T. Hou. Proc. 40th Internat. Sympos. Comput. Geom. (SoCG 2024), Arxiv preprint: arXiv:2307.07462.

Vines

and vineyard connecting a stack of persistence diagrams have been

introduced in the non-zigzag setting by Cohen-Steiner et al. We

consider computing these vines over changing filtrations for zigzag

persistence while incorporating two more operations: expansions and

contractions in addition to the transpositions considered in the

non-zigzag setting. Although expansions and contractions can be

implemented in quadratic time in the non-zigzag case by utilizing the

linear-time transpositions, it is not obvious how they can be carried

out under the zigzag framework with the same complexity. While

transpositions alone can be easily conducted in linear time using the

recent FastZigzag algorithm, expansions and contractions pose

difficulty in breaking the barrier of cubic complexity. Our main result

is that the half-way constructed up-down filtration in the FastZigzag

algorithm indeed can be used to achieve linear time complexity for

transpositions and quadratic time complexity for expansions and

contractions, matching the time complexity of all corresponding

operations in the non-zigzag case.

|

|

|

Topological structure of complex predictions

M. Liu, T. K. Dey, D. Gleich. Nature Machine Intelligence, vol. 5, 1382-1389 (2024).

Complex

prediction models such as deep learning are the output from fitting

machine learning, neural networks, or AI models to a set of training

data. These are now standard tools in science. A key challenge with the

current generation of models is that they are highly parameterized,

which makes describing and interpreting the prediction strategies

difficult. We use topological data analysis to transform these complex

prediction models into pictures representing a topological view. The

result is a map of the predictions that enables inspection. The methods

scale up to large datasets across different domains and enable us to

detect labeling errors in training data, understand generalization in

image classification, and inspect predictions of likely pathogenic

mutations in the BRCA1 gene.

|

|

|

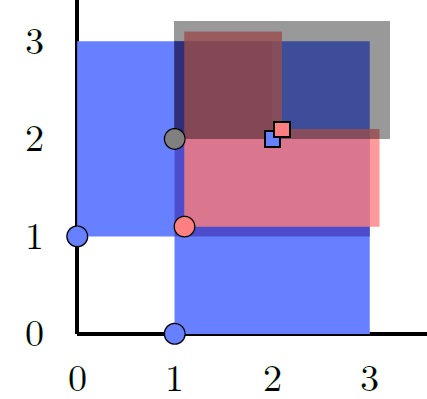

Meta-diagrams for 2-parameter persistence

N. Clause, T. K. Dey, F. Memoli, B. Wang. Proc. 39th Internat. Sympos. Comput. Geom. (SoCG 2023), Arxiv preprint: arXiv:2303.08270

We

first introduce the notion of meta-rank for a 2-parameter persistence

module, an invariant that captures the information behind images of

morphisms between 1D slices of the module. We then define the

meta-diagram of a 2-parameter persistence module to be the Möbius

inversion of the meta-rank, resulting in a function that takes values

from signed 1-parameter persistence modules. We show that the meta-rank

and meta-diagram contain information equivalent to the rank invariant

and the signed barcode. This equivalence leads to computational

benefits, as we introduce an algorithm for computing the meta-rank and

meta-diagram of a 2-parameter module $M$ indexed by a bifiltration of

$n$ simplices in $O(n^3)$ time. This implies an improvement upon the

existing algorithm for computing the signed barcode, which has $O(n^4)$

runtime. This also allows us to improve the existing upper bound on the

number of rectangles in the rank decomposition of $M$ from $O(n^4)$ to

$O(n^3)$. In addition, we define notions of erosion distance between

meta-ranks and between meta-diagrams, and show that under these

distances, meta-ranks and meta-diagrams are stable with respect to the

interleaving distance. Lastly, the meta-diagram can be visualized in an

intuitive fashion as a persistence diagram of diagrams, which

generalizes the well-understood persistence diagram in the 1-parameter

setting.
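The 1-parameter situation that the meta-diagram generalizes can be sketched as follows: the persistence diagram is the Möbius inversion of the rank function, computed by inclusion-exclusion over neighbouring index pairs. This illustrates only that classical special case, not the meta-rank algorithm of the paper. The function name and the toy barcode are assumptions.

```python
from typing import Callable, Dict, Tuple


def diagram_from_rank(rank: Callable[[int, int], int], n: int) -> Dict[Tuple[int, int], int]:
    """Möbius inversion of a 1-parameter rank function on indices 0..n-1.

    rank(i, j) is the rank of the structure map M_i -> M_j for i <= j.
    Returns the multiplicity of each closed interval [b, d].
    """
    def rk(i: int, j: int) -> int:
        # The module is zero outside the index range.
        return rank(i, j) if 0 <= i <= j < n else 0

    dgm: Dict[Tuple[int, int], int] = {}
    for b in range(n):
        for d in range(b, n):
            mult = rk(b, d) - rk(b - 1, d) - rk(b, d + 1) + rk(b - 1, d + 1)
            if mult:
                dgm[(b, d)] = mult
    return dgm


# Toy barcode (assumed data): rank(i, j) counts the bars containing [i, j].
toy_bars = [(1, 3), (1, 3), (2, 2)]


def toy_rank(i: int, j: int) -> int:
    return sum(1 for b, d in toy_bars if b <= i and j <= d)


toy_diagram = diagram_from_rank(toy_rank, n=5)
```

The inversion recovers each bar with its multiplicity from ranks alone. The meta-diagram applies the same kind of inversion to the meta-rank, with values in signed 1-parameter persistence modules instead of integers.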

|

|

|

GRIL: A $2$-parameter persistence based vectorization for machine learning

C. Xin, S. Mukherjee, S. N. Samaga, T. K. Dey. Proc. of TAGML 2023, ArXiv preprint: arXiv:2304.04970 (2023)

1-parameter

persistence, a cornerstone in Topological Data Analysis (TDA),

studies the evolution of topological features such as connected

components and cycles hidden in data. It has been applied to enhance

the representation power of deep learning models, such as Graph Neural

Networks (GNNs). To enrich the representations of topological

features, here we propose to study 2-parameter persistence

modules induced by bi-filtration functions. In order to incorporate

these representations into machine learning models, we introduce a

novel vector representation called Generalized Rank Invariant Landscape

(GRIL) for 2-parameter persistence modules. We show that this

vector representation is 1-Lipschitz stable and differentiable with

respect to underlying filtration functions and can be easily integrated

into machine learning models to augment encoding topological features.

We present an algorithm to compute the vector representation

efficiently. We also test our methods on synthetic and benchmark graph

datasets, and compare the results with previous vector representations

of 1-parameter and 2-parameter persistence modules. Further, we augment

GNNs with GRIL features and observe an increase in performance

indicating that GRIL can capture additional features enriching GNNs. We make the

complete code for the proposed method available at

https://github.com/soham0209/mpml-graph

Github for software

|

|

|

Computing connection matrices via persistence-like reductions

T. K. Dey, M. Lipinski, M. Mrozek, R. Slechta, SIAM J. Applied Dynamical Systems, Vol. 23, Issue 1, pages 81-97, ArXiv preprint: arXiv:2303.02549(2023)

Connection

matrices are a generalization of Morse boundary operators from the

classical Morse theory for gradient vector fields. Developing an

efficient computational framework for connection matrices is

particularly important in the context of a rapidly growing data science

that requires new mathematical tools for discrete data. Toward this goal, the classical theory for

connection matrices has been adapted to combinatorial frameworks that

facilitate computation. We develop an efficient persistence-like

algorithm to compute a connection matrix from a given combinatorial

(multi) vector field on a simplicial complex. This algorithm requires a

single pass, improving upon a known algorithm that runs an implicit

recursion executing two passes at each level. Overall, the new

algorithm is simpler, more direct, and more efficient than the

state-of-the-art. Because of the algorithm's similarity to the

persistence algorithm, one may take advantage of various software optimizations from topological data analysis.

|

|

|

Revisiting graph persistence for updates and efficiency

T. K. Dey, T. Hou, S. Parsa. Proc. 18th Algorithms and Data Structures Symposium (WADS 2023)

ArXiv preprint: arXiv:2302.12796(2023)

It

is well known that ordinary persistence on graphs can be computed more

efficiently than the general persistence. Recently, it has been

shown that zigzag persistence on graphs also exhibits similar behavior.

Motivated by these results, we revisit graph persistence and propose

efficient algorithms especially for local updates on filtrations,

similar to what is done in ordinary persistence for computing the

vineyard. We show that, for a filtration of length $m$, (i) switches

(transpositions) in ordinary graph persistence can be done in $O(\log m)$ time;

(ii) zigzag persistence on graphs can be computed in $O(m\log m)$ time,

which improves a recent $O(m\log^4 n)$ time algorithm assuming

$n$, the size of the union of all graphs in the filtration, satisfies

$n\in\Omega({m^\varepsilon})$ for any fixed $0<\varepsilon<1$;

(iii) open-closed, closed-open, and closed-closed bars in dimension $0$

for graph zigzag persistence can be updated in $O(\log m)$ time, whereas

the open-open bars in dimension $0$ and closed-closed bars in dimension

$1$ can be done in $O(\sqrt{m}\log m)$ time.

|

|

|

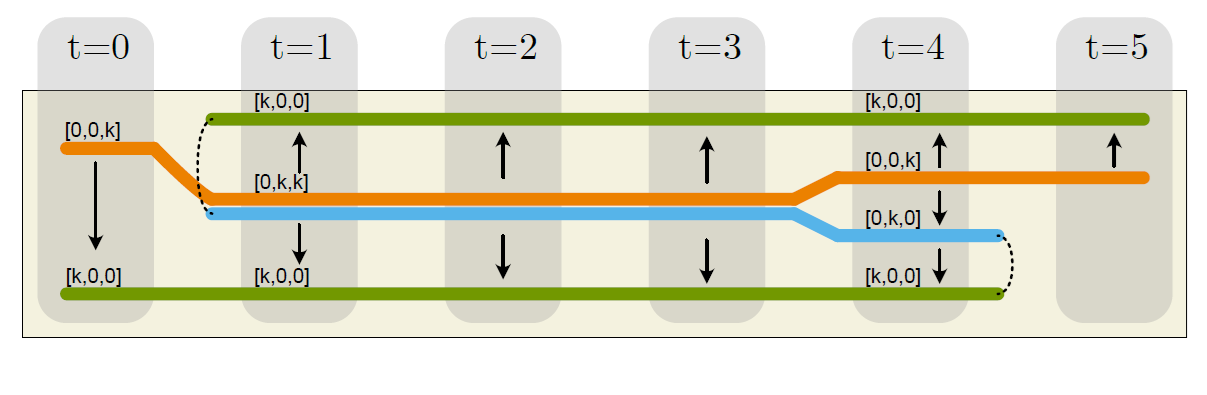

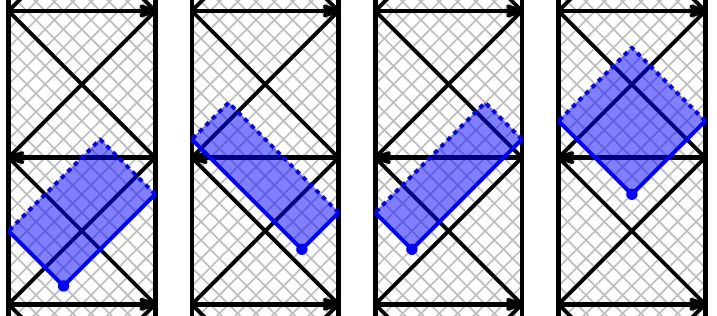

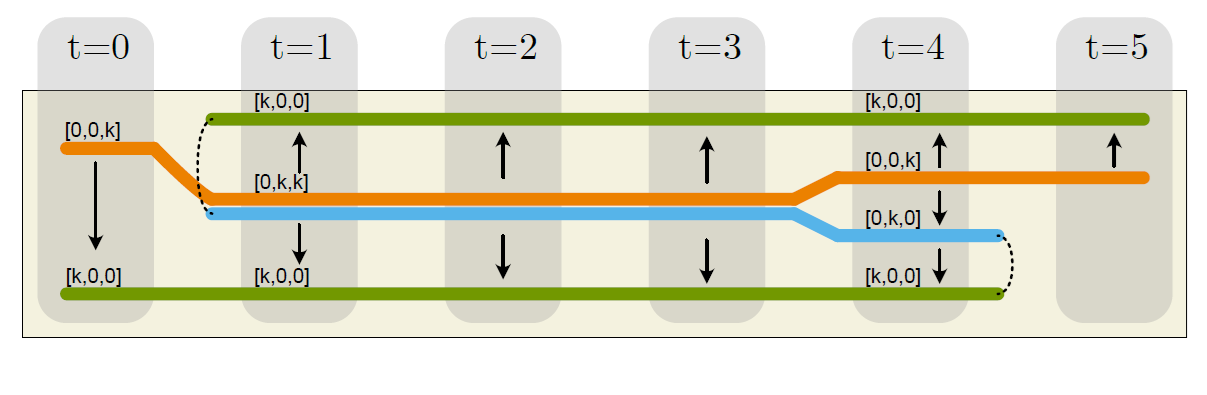

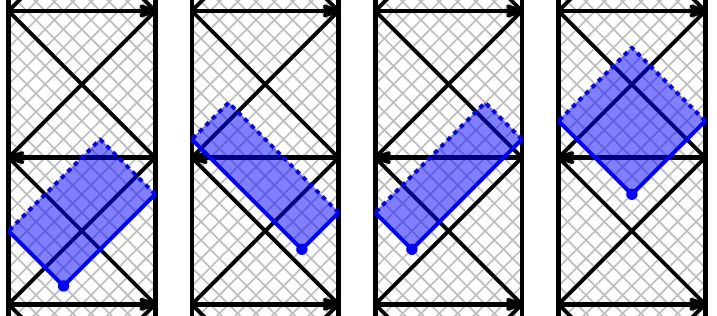

Fast computation of zigzag persistence

T. K. Dey, T. Hou. Proc. 30th European Symposium on Algorithms (ESA 2022), Vol. 244 of LIPIcs, pages 43:1--43:15. ArXiv preprint: arXiv:2204.11080(2022)

[Slides]

Zigzag

persistence is a powerful extension of the standard persistence which

allows deletions of simplices besides insertions. However, computing

zigzag persistence usually takes considerably more time than the

standard persistence. We propose an algorithm called FastZigzag which

narrows this efficiency gap. Our main result is that an input

simplex-wise zigzag filtration can be converted to a cell-wise

non-zigzag filtration of a Δ-complex

with the same length, where the cells are copies of the input

simplices. This conversion step in FastZigzag incurs very little cost.

Furthermore, the barcode of the original filtration can be easily read

from the barcode of the new cell-wise filtration because the conversion

embodies a series of diamond switches known in topological data

analysis. This seemingly simple observation opens up vast

possibilities for improving the computation of zigzag persistence

because any efficient algorithm/software for standard persistence can

now be applied to computing zigzag persistence. Our experiment shows

that this indeed achieves substantial performance gain over the

existing state-of-the-art software.

[Software Download] [AATRN Talk]

This paper originated from: On association between absolute and relative zigzag persistence, ArXiv preprint: arXiv:2110.06315 (2021)

|

|

|

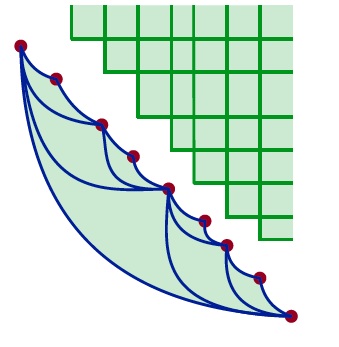

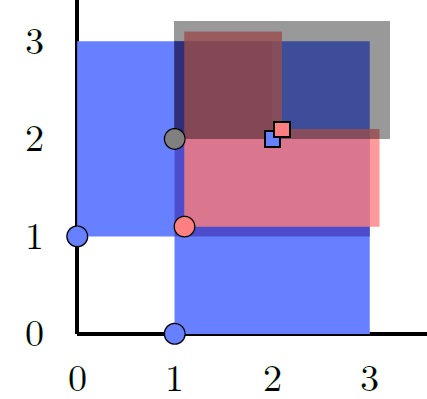

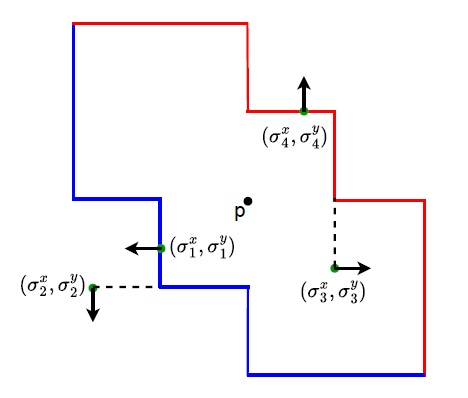

Computing generalized rank invariant for 2-parameter persistence modules via zigzag persistence and its applications

T. K. Dey, W. Kim, and F. Memoli. Proc. 38th Internat. Sympos. Comput. Geom. (SoCG 2022), Vol. 224 of LIPIcs, pages 34:1--34:17, ArXiv preprint: arXiv:2111.15058(2021)

Talk Slides

The

notion of generalized rank invariant in the context of multiparameter

persistence has become an important ingredient for defining interesting

homological structures such as generalized persistence diagrams.

Naturally, computing these rank invariants efficiently is a prelude to

computing any of these derived structures efficiently. We show that the

generalized rank over a finite interval $I$ of a $Z^2$-indexed

persistence module $M$ is equal to the generalized rank of the zigzag

module that is induced on a certain path in $I$ tracing mostly its

boundary. Hence, we can compute the generalized rank over $I$ by

computing the barcode of the zigzag module obtained by restricting the

bifiltration inducing $M$ to that path. If $I$ has $t$ points, this

computation takes $O(t^\omega)$ time where $\omega\in[2,2.373)$ is the

exponent of matrix multiplication. Among others, we apply this result

to obtain an improved algorithm for the following problem. Given a

bifiltration inducing a module $M$, determine whether $M$ is interval

decomposable and, if so, compute all intervals supporting its summands.

Our algorithm runs in time $O(t^{2\omega})$ vastly

improving upon existing algorithms for this problem.

|

|

|

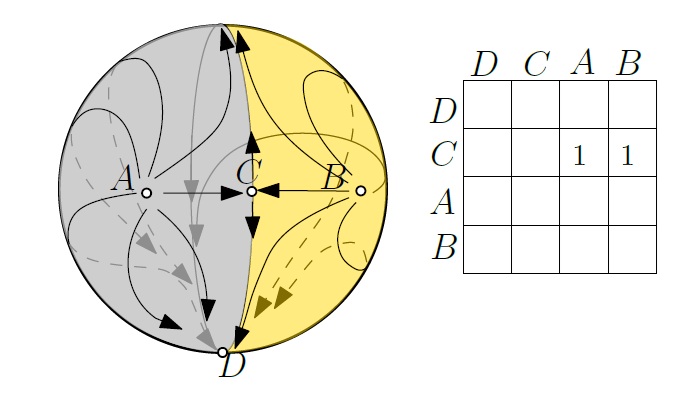

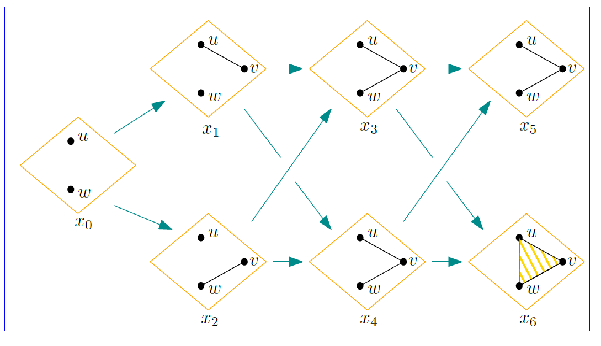

Tracking dynamical features via continuation and persistence

T. K. Dey, M. Lipinski, M. Mrozek, and R. Slechta. Proc.

38th Internat. Sympos. Comput. Geom. (SoCG 2022), Vol. 224 of LIPIcs,

pages 35:1--35:17, ArXiv preprint: https://arxiv.org/abs/2203.05727

Multivector

fields and combinatorial dynamical systems have recently become a

subject of interest due to their potential for use in computational

methods. In this paper, we develop a method to track an isolated

invariant set---a salient feature of a combinatorial dynamical

system---across a sequence of multivector fields. This goal is attained

by placing the classical notion of the ``continuation'' of an isolated

invariant set in the combinatorial setting. In particular, we give a

``Tracking Protocol'' that, when given a seed isolated invariant set,

finds a canonical continuation of the seed across a sequence of

multivector fields. In cases where it is not possible to continue, we

show how to use zigzag persistence to track homological features

associated with the isolated invariant sets. This construction permits

viewing continuation as a special case of persistence.

|

|

|

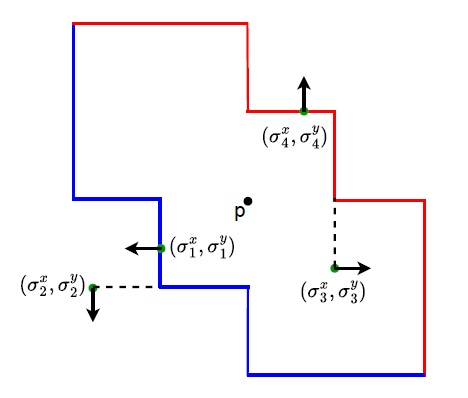

Updating barcodes and representatives for zigzag persistence

T. K. Dey, and T. Hou, ArXiv preprint: arXiv:2112.02352(2021)

Computing

persistence over changing filtrations gives rise to a stack of 2D

persistence diagrams where the birth-death points are connected by the

so-called `vines'. We consider computing these vines over changing

filtrations for zigzag persistence. We observe that eight atomic

operations are sufficient for changing one zigzag filtration to another

and provide update algorithms for each of them. Six of these operations

that have some analogues to one or multiple transpositions in the

non-zigzag case can be executed as efficiently as their non-zigzag

counterparts. This approach takes advantage of a recently discovered

algorithm for computing zigzag barcodes by converting a zigzag

filtration to a non-zigzag one and then connecting barcodes of the two

with a bijection. The remaining two atomic operations do not have a

strict analogue in the non-zigzag case. For them, we propose algorithms

based on explicit maintenance of representatives (homology cycles)

which can be useful in their own right for applications requiring

explicit updates of representatives.

|

|

|

On association between absolute and relative zigzag persistence

T. K. Dey, and T. Hou, ArXiv preprint: arXiv:2110.06315 (2021)

Duality

results connecting persistence modules for absolute and relative

homology provide a fundamental understanding of persistence theory.

In this paper, we study similar associations in the context of zigzag

persistence. Our main finding is a weak duality for the so-called

non-repetitive zigzag filtrations in which a simplex is never added

again after being deleted. The technique used to prove the duality for

non-zigzag persistence does not extend straightforwardly to our case.

Accordingly, taking a different route, we prove the weak duality by

converting a non-repetitive filtration to an up-down filtration by a

sequence of diamond switches. We then show an application of the weak

duality result which gives a near-linear algorithm for computing the $p$-th and a subset of the $(p-1)$-th persistence for a non-repetitive zigzag filtration of a simplicial $p$-manifold.

Utilizing the fact that a non-repetitive filtration admits an up-down

filtration as its canonical form, we further reduce the problem of

computing zigzag persistence for non-repetitive filtrations to the

problem of computing standard persistence for which several efficient

implementations exist. Our experiment shows that this achieves

substantial performance gain. Our study also identifies repetitive

filtrations as instances that fundamentally distinguish zigzag

persistence from the standard persistence.

|

|

|

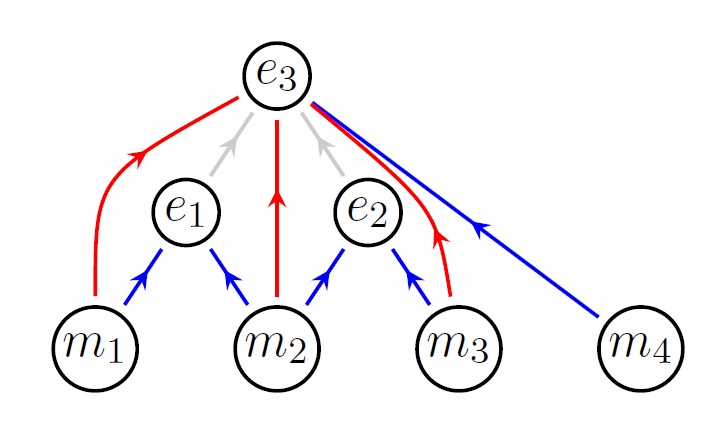

Persistence of Conley-Morse graphs in combinatorial dynamical systems

T. K. Dey, M. Mrozek, and R. Slechta, SIAM J. Applied Dynamical Systems, Vol. 21, Iss. 2, pages 817--839, 2022. ArXiv preprint: https://arxiv.org/abs/2107.02115 (2021)

Multivector

fields provide an avenue for studying continuous dynamical systems in a

combinatorial framework. There are currently two approaches in the

literature which use persistent homology to capture changes in

combinatorial dynamical systems. The first captures changes in the

Conley index, while the second captures changes in the Morse

decomposition. However, such approaches have limitations. The former

approach only describes how the Conley index changes across a selected

isolated invariant set though the dynamics can be much more complicated

than the behavior of a single isolated invariant set. Likewise,

considering a Morse decomposition omits much information about the

individual Morse sets. In this paper, we propose a method to summarize

changes in combinatorial dynamical systems by capturing changes in the

so-called Conley-Morse graphs. A Conley-Morse graph contains

information about both the structure of a selected Morse decomposition

and about the Conley index at each Morse set in the decomposition.

Hence, our method summarizes the changing structure of a sequence of

dynamical systems at a finer granularity than previous approaches.

|

|

|

Topological filtering for 3D microstructure segmentation

A. V. Patel, T. Hou, J. D. B. Rodriguez, T. K. Dey, and D. P. Birnie III, Computational Materials Science, 202:110920, 2022.

Tomography

is a widely used tool for analyzing microstructures in three dimensions

(3D). The analysis, however, faces difficulty because the constituent

materials produce similar grey-scale values. Sometimes, this prompts

the image segmentation process to assign a pixel/voxel to the wrong

phase (active material or pore). Consequently, errors are introduced in

the microstructure characteristics calculation. In this work, we

develop a filtering algorithm called PerSplat based on topological

persistence (a technique used in topological data analysis) to improve

segmentation quality. One problem faced when evaluating filtering

algorithms is that real image data in general are not equipped with the

`ground truth' for the microstructure characteristics. For this study,

we construct synthetic images for which the ground-truth values are

known. On the synthetic images, we compare the pore tortuosity and

Minkowski functionals (volume and surface area) computed with our

PerSplat filter and other methods such as total variation (TV) and

non-local means (NL-means). Moreover, on a real 3D image, we visually

compare the segmentation results provided by our filter against TV and

NL-means. The experimental results indicate that PerSplat provides a

significant improvement in segmentation quality.

|

|

|

Approximating 1-Wasserstein distance between persistence diagrams by graph sparsification

T.

K. Dey, S. Zhang, SIAM Sympos. Algorithm Engg. Experiments (ALENEX22), 2022.

Persistence

diagrams (PDs) play a central role in topological data analysis. This

analysis requires computing distances among such diagrams such as the

1-Wasserstein distance. Accurate computation of these PD distances for

large data sets that render large diagrams may not scale appropriately

with the existing methods. The main source of difficulty ensues from

the size of the bipartite graph on which a matching needs to be

computed for determining these PD distances. We address this problem by

making several algorithmic and computational observations in order to

obtain an approximation. First, taking advantage of the proximity of PD

points, we condense them thereby decreasing the number of nodes in the

graph for computation. The increase in point multiplicities is

addressed by reducing the matching problem to a min-cost flow problem

on a transshipment network. Second, we use Well Separated Pair

Decomposition to sparsify the graph to a size that is linear in the

number of points. Both node and arc sparsifications contribute to the

approximation factor where we leverage a lower bound given by the

Relaxed Word Mover's distance. Third, we eliminate bottlenecks during

the sparsification procedure by introducing parallelism. Fourth, we

develop an open source software called PDoptFlow based on our

algorithm, exploiting parallelism by GPU and multicore. We perform

extensive experiments and show that the actual empirical error is very

low. We also show that we can achieve high performance at low

guaranteed relative errors, improving upon the state of the art.
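
For orientation, the exact quantity being approximated can be sketched on very small diagrams. The following is only an illustrative brute-force baseline, not the PDoptFlow algorithm: it builds the usual augmented bipartite matching (each diagram padded with diagonal slots for the other's points) and tries every perfect matching. The function names and the choice of the $L_\infty$ ground metric are assumptions made here for illustration.

```python
from itertools import permutations


def dist_to_diagonal(p):
    # L-infinity distance from a point (birth, death) to the diagonal.
    return (p[1] - p[0]) / 2.0


def linf(p, q):
    # L-infinity distance between two diagram points (assumed ground metric).
    return max(abs(p[0] - q[0]), abs(p[1] - q[1]))


def wasserstein1_bruteforce(d1, d2):
    """Exact 1-Wasserstein distance between two tiny persistence diagrams.

    Left side: points of d1 followed by len(d2) diagonal slots.
    Right side: points of d2 followed by len(d1) diagonal slots.
    Runs in factorial time, so it is usable only for a handful of points.
    """
    n1, n2 = len(d1), len(d2)
    n = n1 + n2

    def cost(i, j):
        if i < n1 and j < n2:
            return linf(d1[i], d2[j])        # point matched to point
        if i < n1:
            return dist_to_diagonal(d1[i])   # d1 point sent to the diagonal
        if j < n2:
            return dist_to_diagonal(d2[j])   # d2 point sent to the diagonal
        return 0.0                           # diagonal slot to diagonal slot

    return min(
        sum(cost(i, perm[i]) for i in range(n))
        for perm in permutations(range(n))
    )
```

The factorial cost of this baseline is exactly why the bipartite graph size matters: sparsifying nodes and arcs, as the abstract describes, is what makes large diagrams tractable.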

|

|

|

Computing optimal persistent cycles for levelset zigzag on manifold-like complexes

T.

K. Dey, T. Hou, arXiv: https://arxiv.org/abs/2105.00518 May 2021.

In

standard persistent homology, a persistent cycle born and dying with a

persistence interval (bar) associates the bar with a concrete

topological representative, which provides means to effectively

navigate back from the barcode to the topological space. Among the

possibly many, optimal persistent cycles bring forth further

information due to having guaranteed quality. However, topological

features usually go through variations in the lifecycle of a bar which

a single persistent cycle may not capture. Hence, for persistent

homology induced from PL functions, we propose levelset persistent

cycles consisting of a sequence of cycles that depict the evolution of

homological features from birth to death. Our definition is based on

levelset zigzag persistence which involves four types of persistence

intervals as opposed to the two types in standard persistence. For each

of the four types, we present a polynomial-time algorithm computing an

optimal sequence of levelset persistent $p$-cycles for the so-called weak $(p+1)$-pseudomanifolds.

Given that optimal cycle problems for homology are NP-hard in general,

our results are useful in practice because weak pseudomanifolds do

appear in applications. Our algorithms draw upon an idea of relating

optimal cycles to min-cuts in a graph that we exploited earlier for

standard persistent cycles. Note that levelset zigzag poses non-trivial

challenges for the approach because a sequence of optimal cycles

instead of a single one needs to be computed in this case.

|

|

|

Computing zigzag persistence on graphs in near-linear time

T.

K. Dey, T. Hou, March 2021, Proc. 37th Internat. Sympos. Comput. Geom. (2021), Vol. 189 of LIPIcs, pages 30:1--30:15 (SoCG 2021).

Graphs model real-world circumstances in many

applications where they may constantly change to capture the dynamic

behavior of the phenomena. Topological persistence which provides a set

of birth and death pairs for the topological features is one instrument

for analyzing such changing graph data. However, standard persistent

homology defined over a growing space cannot always capture such a

dynamic process unless shrinking with deletions is also allowed. Hence,

zigzag persistence which incorporates both insertions and deletions of

simplices is more appropriate in such a setting. Unlike standard

persistence which admits nearly linear-time algorithms for graphs, such

results for the zigzag version improving the general $O(m^\omega)$ time

complexity are not known, where $\omega< 2.37286$ is the matrix

multiplication exponent. In this paper, we propose algorithms for

zigzag persistence on graphs which run in near-linear time.

Specifically, given a filtration with $m$ additions and deletions on a

graph with $n$ vertices and edges, the algorithm for $0$-dimension runs

in $O(m\log^2 n+m\log m)$ time and the algorithm for $1$-dimension runs

in $O(m\log^4 n)$ time.
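
As background for the contrast drawn above, the near-linear behavior of standard (non-zigzag) $0$-dimensional persistence on graphs comes from a union-find pass with the elder rule. The sketch below illustrates only that standard case, not the zigzag algorithms of the paper; the function name and input layout are assumptions, and every edge is assumed to appear no earlier than its endpoints.

```python
def graph_h0_persistence(vertex_times, edges):
    """0-dimensional persistence of a growing graph via union-find.

    vertex_times: dict mapping vertex -> time the vertex is inserted.
    edges: list of (time, u, v) tuples.
    Returns (finite_bars, essential_births).
    """
    parent = {v: v for v in vertex_times}

    def find(v):
        # Find the root with path halving.
        while parent[v] != v:
            parent[v] = parent[parent[v]]
            v = parent[v]
        return v

    bars = []
    for t, u, v in sorted(edges, key=lambda e: e[0]):
        ru, rv = find(u), find(v)
        if ru == rv:
            continue  # the edge closes a cycle; H_0 is unchanged
        # Elder rule: the component with the younger root dies at time t.
        if vertex_times[ru] > vertex_times[rv]:
            ru, rv = rv, ru
        bars.append((vertex_times[rv], t))
        parent[rv] = ru  # the older root stays the representative

    essential = sorted(vertex_times[r] for r in vertex_times if find(r) == r)
    return bars, essential
```

Deletions break this scheme because union-find cannot split components, which is one way to see why the zigzag setting needs different data structures.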

|

|

|

Persistence of the Conley Index in Combinatorial Dynamical Systems

T.

K. Dey, M. Mrozek, and R. Slechta, February 2020, Proc. Internat. Sympos. Comput. Geom. (2020) (SoCG 2020).

A combinatorial framework for dynamical systems provides an avenue for connecting classical

dynamics with algorithmic methods. Discrete Morse vector fields by

Forman and its recent adaptation to multivector fields by Mrozek have

laid the foundation for this combinatorial framework. In this work, we

make a further connection to computational topology by putting the

well-known Conley index of (multi)vector fields into the persistence

framework. Conley indices are homological features of the so-called

invariant sets in a dynamical system. We show how one can compute the

persistence of these indices over a sequence of multivector fields

sampled from an underlying dynamical system. This also enables us to

`track' features in a dynamical system in a principled way.

|

|

|

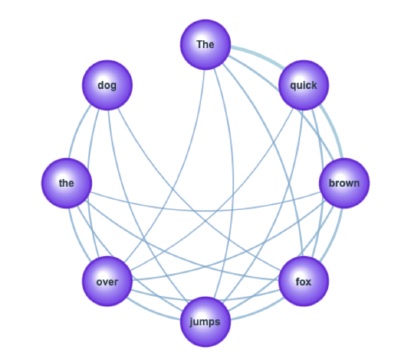

An efficient algorithm for 1-dimensional (persistent) path homology

T.

K. Dey, T. Li, and Y. Wang, January 2020, arxiv:https://arxiv.org/abs/2001.09549, Proc. Internat. Sympos. Comput. Geom. (2020) (SoCG 2020).

This paper focuses on developing an efficient algorithm for analyzing a

directed network (graph) from a topological viewpoint. A prevalent technique

for such topological analysis involves computation of homology groups and their

persistence. These concepts are well suited for spaces that are not directed.

As a result, one needs a concept of homology that accommodates orientations in

input space. Path-homology developed for directed graphs by Grigor'yan, Lin,

Muranov and Yau has been effectively adapted for this purpose recently by

Chowdhury and Mémoli. They also give an algorithm to compute this

path-homology. Our main contribution in this paper is an algorithm that

computes this path-homology and its persistence more efficiently for the

$1$-dimensional ($H_1$) case. In developing such an algorithm, we discover

various structures and their efficient computations that aid computing the

$1$-dimensional path-homology. We implement our algorithm and present some

preliminary experimental results.

|

|

|

Road Network reconstruction from Satellite Images with Machine Learning Supported by Topological Methods

T.

K. Dey, J. Wang, and Y. Wang, September 2019, arxiv:https://arxiv.org/pdf/1909.06728.pdf. A shorter version to appear in SIGSPATIAL 2019.

Automatic

extraction of road networks from satellite images is a goal that can

benefit and even enable new technologies. Methods that combine machine

learning (ML) and computer vision have been proposed in recent years

which make the task semi-automatic by requiring the user to provide

curated training samples. The process can be fully automatized if

training samples can be produced algorithmically. Of course, this

requires a robust algorithm that can reconstruct the road networks from

satellite images reliably so that the output can be fed as training

samples. In this work, we develop such a technique by infusing a

persistence-guided discrete Morse based graph reconstruction algorithm

into an ML framework.

We

elucidate our contributions in two phases. First, in a semi-automatic

framework, we combine a discrete-Morse based graph reconstruction

algorithm with an existing CNN framework to segment input satellite

images. We show that this leads to reconstructions with better

connectivity and less noise. Next, in a fully automatic framework, we

leverage the power of the discrete-Morse based graph reconstruction

algorithm to train a CNN from a collection of images without labelled

data and use the same algorithm to produce the final output from the

segmented images created by the trained CNN. We apply the

discrete-Morse based graph reconstruction algorithm iteratively to

improve the accuracy of the CNN. We show promising experimental results

of this new framework on datasets from SpaceNet Challenge.

|

|

|

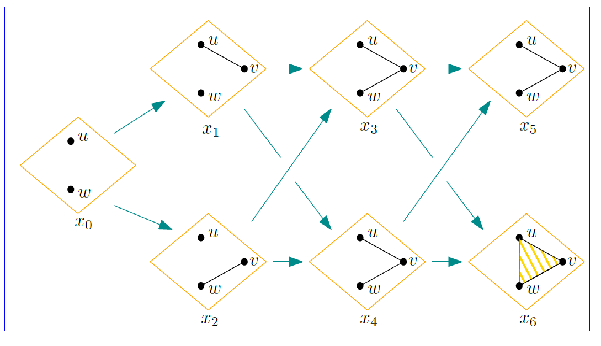

Generalized Persistence Algorithm for Decomposing Multi-parameter Persistence Modules

T.

K. Dey and Cheng Xin, J. Applied and Computational Topology (JACT), 2022. ArXiv version https://arxiv.org/abs/1904.03766.

Link to Journal version

The

classical persistence algorithm computes the unique decomposition of a

persistence module implicitly given by an input simplicial filtration.

Based on matrix reduction, this algorithm is a cornerstone of the

emergent area of topological data analysis. Its input is a simplicial

filtration defined over the integers $\mathbb{Z}$ giving rise to a

$1$-parameter persistence module. It has been recognized that

the multiparameter version of persistence modules given by simplicial

filtrations over $d$-dimensional integer grids $\mathbb{Z}^d$ is

equally or perhaps more important in data science applications.

However, in the multiparameter setting, one of the main challenges is

that topological summaries based on algebraic structure such as

decompositions and bottleneck distances cannot be as efficiently

computed as in the $1$-parameter case because there is no known

extension of the persistence algorithm to multiparameter persistence

modules. We present an efficient algorithm to compute the unique

decomposition of a finitely presented persistence module $M$ defined

over the multiparameter $\mathbb{Z}^d$. The algorithm first assumes

that the module is presented with a set of $N$ generators and relations

that are distinctly graded. Based on a generalized matrix

reduction technique it runs in $O(N^{2\omega+1})$ time where

$\omega<2.373$ is the exponent of matrix multiplication. This is

much better than the well known algorithm called Meataxe which runs in

$\tilde{O}(N^{6(d+1)})$ time on such an input. In practice, persistence

modules are usually induced by simplicial filtrations. With such an

input consisting of $n$ simplices, our algorithm runs in

$O(n^{(d-1)(2\omega + 1)})$ time for $d\geq 2$. For the special case of

zero dimensional homology, it runs in time $O(n^{2\omega +1})$.
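
The classical $1$-parameter persistence algorithm that this work generalizes can be sketched as a left-to-right column reduction of the boundary matrix over $\mathbb{Z}_2$. The code below is a minimal illustration of that textbook reduction only, not of the multiparameter algorithm; the function name and the set-based column representation are assumptions made for the example.

```python
def reduce_boundary_matrix(columns):
    """Standard persistence reduction over Z_2.

    columns[j] is the set of row indices in the boundary of the j-th
    simplex, with simplices indexed in filtration order.
    Returns (pairs, essential): pairs are (birth_index, death_index),
    essential lists indices of simplices that are never paired.
    """
    reduced = [set(c) for c in columns]
    pivot_owner = {}  # lowest row index -> column that owns it
    pairs = []
    for j, col in enumerate(reduced):
        # Add earlier columns (mod 2) until the lowest entry is unique.
        while col and max(col) in pivot_owner:
            col ^= reduced[pivot_owner[max(col)]]
        if col:
            low = max(col)
            pivot_owner[low] = j
            pairs.append((low, j))
    paired = {i for pair in pairs for i in pair}
    essential = [j for j in range(len(columns)) if j not in paired]
    return pairs, essential
```

For a filled triangle with simplices ordered as three vertices, three edges, then the face, the reduction pairs two vertices with the edges that merge them and the last edge with the face, leaving one essential connected component.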

|

|

|

Computing Minimal Persistent Cycles: Polynomial and Hard Cases

T.

K. Dey, T. Hou, and S. Mandal. Proceedings ACM-SIAM Sympos. Discrete Algorithms (SODA 20).

July 2019, arxiv: https://arxiv.org/abs/1907.04889

Persistent cycles, especially the minimal ones, are useful geometric features

functioning as augmentations for the intervals in the purely topological

persistence diagrams (also termed as barcodes). In our earlier work, we showed

that computing minimal 1-dimensional persistent cycles (persistent 1-cycles)

for finite intervals is NP-hard while the same for infinite intervals is

polynomially tractable. In this paper, we address this problem for general

dimensions with $\mathbb{Z}_2$ coefficients. In addition to proving that it is NP-hard

to compute minimal persistent $d$-cycles ($d>1$) for both types of intervals given

arbitrary simplicial complexes, we identify two interesting cases which are

polynomially tractable. These two cases assume the complex to be a certain

generalization of manifolds which we term as weak pseudomanifolds. For finite

intervals from the $d$-th persistence diagram of a weak $(d+1)$-pseudomanifold, we

utilize the fact that persistent cycles of such intervals are null-homologous

and reduce the problem to a minimal cut problem. Since the same problem for

infinite intervals is NP-hard, we further assume the weak $(d+1)$-pseudomanifold

to be embedded in $\mathbb{R}^{d+1}$ so that the complex has a natural dual

graph structure and the problem reduces to a minimal cut problem. Experiments

with both algorithms on scientific data indicate that the minimal persistent

cycles capture various significant features of the data.

|

|

|

Persistent 1-Cycles: Definition, Computation, and Its Application

T.

K. Dey, T. Hou, and S. Mandal. Proceedings Computational Topology in Image Context (CTIC 2019), LNCS, Vol. 11382, pages 123--136.

arxiv version: https://arxiv.org/abs/1810.04807

See this web-page for software etc.: http://web.cse.ohio-state.edu/~dey.8/PersLoop/

Persistence

diagrams, which summarize the birth and death of homological features

extracted from data, are employed as stable signatures for applications

in image analysis and other areas. Besides simply considering the

multiset of intervals included in a persistence diagram, some

applications need to find representative cycles for the intervals. In

this paper, we address the problem of computing these representative

cycles, termed as persistent $1$-cycles.

The definition of persistent cycles is based on the interval module

decomposition of persistence modules, which reveals the structure of

persistent homology. After showing that the computation of the optimal

persistent $1$-cycles is NP-hard, we propose an alternative set

of meaningful persistent

$1$-cycles that can be computed with an efficient polynomial time

algorithm. We also inspect the stability issues of the optimal

persistent $1$-cycles and the persistent $1$-cycles computed by our

algorithm with the observation that the perturbations of both cannot be

properly bounded. We design software that applies our algorithm to

various datasets. Experiments on 3D point clouds, mineral structures,

and images show the effectiveness of our algorithm in practice.

|

|

|

Filtration simplification for persistent homology via edge contraction

T.

K. Dey, R. Slechta. Internat. Conf. Discrete Geom. for Comput. Imagery (DGCI 2019), pages 89--100.

arxiv version: https://arxiv.org/abs/1810.04388

See this web-page for software: https://github.com/rslechta/pers-contract/

Persistent

homology is a popular data analysis technique that is used to capture

the changing topology of a filtration associated with some simplicial

complex $K$. These topological changes are summarized in persistence

diagrams. We propose two contraction operators which when applied to

$K$ and its associated filtration, bound the perturbation in the

persistence diagrams. The first assumes that the underlying space of

$K$ is a $2$-manifold and ensures that simplices are paired with the

same simplices in the contracted complex as they are in the original.

The second is for arbitrary $d$-complexes, and bounds the bottleneck

distance between the initial and contracted $p$-dimensional persistence

diagrams. This is accomplished by defining interleaving maps between

persistence modules which arise from chain maps defined over the

filtrations. In addition, we show how the second operator can

efficiently compose across multiple contractions. We conclude with

experiments demonstrating the second operator's utility on manifolds.

|

|

|

Computing height persistence and homology generators in R^3 efficiently

T.

K. Dey. Proc. 30th ACM-SIAM Sympos. Discrete Algorithms, 2019 (SODA 19), pages 2649--2662.

arxiv version: https://arxiv.org/abs/1807.03655

Talk Slides

Recently

it has been shown that computing the dimension of the first

homology group $H_1(K)$ of a simplicial $2$-complex $K$ embedded

linearly in $R^4$ is as hard as computing the rank of a sparse

$0-1$ matrix. This puts a major roadblock to computing

persistence and a homology basis (generators) for complexes

embedded in $R^4$ and beyond in less than quadratic or even

near-quadratic time. But, what about dimension three? It is known

that persistence for piecewise linear functions on a complex $K$ with

$n$ simplices can be computed in $O(n\log n)$ time and a set of

generators of total size $k$ can be computed in $O(n+k)$ time when $K$

is a graph or a surface linearly embedded in $R^3$. But, the

question for general simplicial complexes $K$ linearly embedded

in $R^3$ is not completely settled. No algorithm with a complexity

better than that of the matrix multiplication is known for

this important case. We show that the persistence for height

functions on such complexes, hence called height

persistence, can be computed in $O(n\log n)$ time. This

allows us to compute a basis (generators) of $H_i(K)$, $i=1,2$, in

$O(n\log n+k)$ time where $k$ is the size of the output. This improves

significantly the current best bound of $O(n^{\omega})$, $\omega$ being

the matrix multiplication exponent. We achieve these improved

bounds by leveraging recent results on zigzag persistence in

computational topology, new observations about Reeb graphs, and some

efficient geometric data structures.

|

|

|

Edge contraction in persistence-generated discrete Morse vector fields

T.

K. Dey and R. Slechta. Proc. SMI 2018, Computers & Graphics, Vol. 74, 33--43.

Journal version: https://doi.org/10.1016/j.cag.2018.05.002

Recently,

discrete Morse vector fields have been shown to be useful in various

applications. Analogous to the simplification of large meshes using

edge contractions, one may want to simplify the cell complex $K$ on

which a discrete Morse vector field $V(K)$ is defined. To this end, we

define a gradient aware edge contraction operator for triangulated

$2$-manifolds with the following guarantee. If $V(K)$ was generated by

a specific persistence-based method, then the vector field that results

from our contraction operator is exactly the same as the vector field

produced by applying the same persistence-based method to the

contracted complex. An implication of this result is that local

operations on $V(K)$ are sufficient to produce the persistence-based

vector field on the contracted complex. Furthermore, our experiments

show that the structure of the vector field is largely preserved by our

operator. For example, $1$-unstable manifolds remain largely unaffected

by the contraction. This suggests that for some applications of

discrete Morse theory, it is sufficient to use a contracted complex.

Also, see our recent work on Discrete Morse based reconstruction here and here

|

|

|

Persistent homology of Morse decompositions in combinatorial dynamics

T.

K. Dey, M. Juda, T. Kapela, J. Kubica, M. Lipinski, M. Mrozek. https://arxiv.org/abs/1801.06590. 2018. SIAM J. on Applied Dynamical Systems, Vol. 18, Issue 1, 510--530, 2019.

We investigate combinatorial dynamical systems on simplicial complexes considered as finite topological spaces.

Such systems arise in a natural way from sampling dynamics and may be

used to reconstruct some features of the dynamics directly from

the sample. We study the homological persistence of Morse decompositions

of such systems, an important descriptor of the dynamics, as a

tool for validating the reconstruction. Our framework can be viewed as

a step toward extending the classical persistence theory to "vector

cloud" data. We present experimental results on two numerical

examples.

|

|

|

Here is the n-D version of the SoCG paper.

Computing Bottleneck Distance for Multi-parameter Interval Decomposable Persistence Modules

Preprint, September, 2019.

Computing Bottleneck Distance for 2-D Interval Decomposable Modules

T. K. Dey, C. Xin. Proc. 34th Internat. Sympos. Comput. Geom., 32:1--32:15 (SoCG 2018).

Computation of the interleaving distance between persistence modules is a central

task in topological data analysis. For $1$-D persistence modules,

thanks to the isometry theorem, this can be done by computing the

bottleneck distance with known efficient algorithms. The question is

open for most $n$-D persistence modules, $n>1$, because of the

well-recognized complications of the indecomposables. Here, we consider a

reasonably complicated class called $2$-D interval decomposable

modules whose indecomposables may have a description of non-constant

complexity. We present a polynomial time algorithm to compute the

bottleneck distance for these modules from indecomposables, which

bounds the interleaving distance from above, and give another algorithm

to compute a new distance called dimension distance that bounds

it from below.
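For the $1$-D case mentioned in the abstract, the bottleneck distance between two persistence diagrams can be illustrated with a small brute-force sketch. This is not the algorithm of the paper and not one of the efficient known algorithms; it simply enumerates all matchings and is only usable on very small diagrams. The function name `bottleneck_distance` and the representation of a diagram as a list of `(birth, death)` pairs are assumptions made for illustration.

```python
from itertools import permutations


def bottleneck_distance(diag_a, diag_b):
    """Brute-force bottleneck distance between two tiny 1-D persistence diagrams.

    Each diagram is a list of (birth, death) pairs. Every point may be matched
    either to a point of the other diagram (L-infinity cost) or to the diagonal
    (cost = half its persistence). Illustrative only: runtime is factorial.
    """
    n, m = len(diag_a), len(diag_b)
    size = n + m  # each side is padded with diagonal slots for the other side

    def to_diagonal(p):
        # L-infinity distance from p to the diagonal birth == death
        return (p[1] - p[0]) / 2.0

    def cost(i, j):
        a_is_point = i < n  # rows n..size-1 are diagonal slots
        b_is_point = j < m  # cols m..size-1 are diagonal slots
        if a_is_point and b_is_point:
            return max(abs(diag_a[i][0] - diag_b[j][0]),
                       abs(diag_a[i][1] - diag_b[j][1]))
        if a_is_point:
            return to_diagonal(diag_a[i])
        if b_is_point:
            return to_diagonal(diag_b[j])
        return 0.0  # diagonal matched to diagonal is free

    best = float("inf")
    for perm in permutations(range(size)):
        worst = max((cost(i, perm[i]) for i in range(size)), default=0.0)
        best = min(best, worst)
    return best
```

Under these assumptions, `bottleneck_distance([(0, 4)], [(0, 5)])` matches the two points directly, whereas a diagram compared against the empty diagram pays half the persistence of its most persistent point.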

|

|

|

Graph Reconstruction by Discrete Morse Theory

T. K. Dey, J. Wang and Y. Wang. Proc. Internat. Sympos. Comput. Geom., 31:1--31:15 (SoCG 2018).

Recovering hidden graph-like structures from potentially noisy data is a

fundamental task in modern data analysis. Recently, a persistence-guided

discrete Morse-based framework to extract a geometric graph from

low-dimensional data has become popular. However, to date, there is very

limited theoretical understanding of this framework in terms of graph

reconstruction. This paper makes a first step towards closing this gap.

Specifically, first, leveraging existing theoretical understanding of

persistence-guided discrete Morse cancellation, we provide a simplified version

of the existing discrete Morse-based graph reconstruction algorithm. We then

introduce a simple and natural noise model and show that the aforementioned

framework can correctly reconstruct a graph under this noise model, in the

sense that it has the same loop structure as the hidden ground-truth graph, and

is also geometrically close. We also provide some experimental results for our

simplified graph-reconstruction algorithm.

Also see this paper in SIGSPATIAL (2017).

Improved Road Network Reconstruction Using Discrete Morse Theory. T. K. Dey, J. Wang, and Y. Wang. Proc. SIGSPATIAL 2017.

|

|

|

Application of Topological Data Analysis in Machine Learning for Image and Protein Classification

|

Protein Classification with Improved Topological Data Analysis

T. K. Dey and S. Mandal. Proc. Workshop on Algorithms in Bioinformatics (WABI 2018), 6:1--6:13. DOI 10.4230/LIPIcs.WABI.2018.6

Web-page: http://web.cse.ohio-state.edu/~dey.8/proteinTDA/

Automated annotation and analysis of protein molecules have long been a topic of interest due to

immediate applications in medicine and drug design. In this work, we propose a topology based,

fast, scalable, and parameter-free technique to generate protein signatures.

We build an initial simplicial complex using information about the protein’s constituent atoms,

including radius and existing chemical bonds, to model the hierarchical structure of the molecule.

Simplicial collapse is used to construct a filtration which we use to compute persistent homology.

This information constitutes our signature for the protein. In addition, we demonstrate that this

technique scales well to large proteins. Our method shows sizable time and memory improvements

compared to other topology based approaches. We use the signature to train a protein domain

classifier. Finally, we compare this classifier against models built from state-of-the-art

structure-based protein signatures on standard datasets and achieve a substantial improvement in accuracy.

Improved Image Classification using Topological Persistence

T. K. Dey, S. Mandal, W. Varcho. Proc. Vision, Modeling and Visualization (VMV 2017). http://dx.doi.org/10.2312/vmv.20171272

Web-page: http://web.cse.ohio-state.edu/~dey.8/imagePers/

Image classification has been a topic of interest for many years. With

the advent of Deep Learning, impressive progress has been made on the

task, resulting in quite accurate classification. Our work focuses on

improving modern image classification techniques by considering

topological features as well. We show that incorporating this

information allows our models to improve the accuracy, precision and

recall on test data, thus providing evidence that topological signatures

can be leveraged for enhancing some of the state-of-the-art

applications in computer vision.

|

|

|

Efficient Algorithms for Computing a Minimal Homology Basis

T. K. Dey, T. Li, and Y. Wang. Proc. LATIN 2018: Theoretical Informatics, LNCS, Vol. 10807, 376--398 (LATIN 2018).

Efficient computation of shortest cycles which form a homology basis

under $\mathbb{Z}_2$-additions in a given simplicial complex K has been

researched actively in recent years. When the complex K is a weighted

graph with n vertices and m edges, the problem of computing a

shortest (homology) cycle basis is known to be solvable in $O(m^2n/\log

n+ n^2m)$-time. Several works [1,2] have addressed the case when the

complex K is a 2-manifold. The complexity of these algorithms

depends on the rank g of the one-dimensional homology group of K.

This rank g can be as large as $\Theta(n)$, where n denotes the

number of simplices in K, giving an $O(n^4)$ worst-case time complexity

for the algorithms in [1,2]. This worst-case complexity is improved in

[3] to $O(n^\omega+n^2g^{\omega-1})$ for general simplicial complexes

where $\omega< 2.3728639$ [4] is the matrix multiplication exponent.

Taking $g=\Theta(n)$, this provides an $O(n^{\omega+1})$ worst-case

algorithm. In this paper, we improve this time complexity.

Combining the divide and conquer technique from [5] with the use

of annotations from [3], we present an algorithm that runs in

$O(n^\omega+n^2g)$ time, giving the first $O(n^3)$ worst-case algorithm

for general complexes. If, instead of a minimal basis, we settle for

an approximate basis, we can improve the running time even further. We

show that a 2-approximate minimal homology basis can be computed

in $O(n^{\omega}\sqrt{n \log n})$ expected time. We also study more

general measures for defining the minimal basis and identify reasonable

conditions on these measures that allow computing a minimal basis

efficiently.

|

|

|

Temporal Hierarchical Clustering

T. K. Dey, A. Rossi, and A. Sidiropoulos. Proc. 28th Internat. Symposium on Algorithms and Computation (ISAAC 2017).

https://arxiv.org/abs/1707.09904

We study hierarchical clusterings of metric spaces that change over time.

This is a natural geometric primitive for the analysis of dynamic data sets.

Specifically, we introduce and study the problem of finding a temporally

coherent sequence of hierarchical clusterings from a sequence of unlabeled

point sets. We encode the clustering objective by embedding each point set into

an ultrametric space, which naturally induces a hierarchical clustering of the

set of points. We enforce temporal coherence among the embeddings by finding

correspondences between successive pairs of ultrametric spaces which exhibit

small distortion in the Gromov-Hausdorff sense. We present both upper and lower

bounds on the approximability of the resulting optimization problems.
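To make the link between ultrametrics and hierarchical clustering concrete, here is a minimal sketch that turns a distance matrix into its subdominant (single-linkage) ultrametric by computing minimax path distances. It only illustrates how an ultrametric encodes a hierarchy; it is not the embedding or the approximation algorithm studied in the paper, and the function name `subdominant_ultrametric` is hypothetical.

```python
def subdominant_ultrametric(dist):
    """Return the subdominant ultrametric of a symmetric distance matrix.

    u[i][j] is the smallest threshold t such that i and j are joined by a
    path whose hops all have length <= t. Thresholding u at any t yields the
    single-linkage clusters at scale t, so u encodes a full hierarchy.
    Computed with a Floyd-Warshall style minimax relaxation in O(n^3).
    """
    n = len(dist)
    u = [row[:] for row in dist]  # copy so the input is left untouched
    for k in range(n):
        for i in range(n):
            for j in range(n):
                via_k = max(u[i][k], u[k][j])
                if via_k < u[i][j]:
                    u[i][j] = via_k
    return u
```

For three points on a line at positions 0, 1, and 3, the distance 3 between the outer points is replaced by 2, the largest hop on the path through the middle point.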

|

|

|

Temporal Clustering

T. K. Dey, A. Rossi, and A. Sidiropoulos. Proc. European Symposium on Algorithms (ESA 2017).

Full Version

https://arxiv.org/abs/1704.05964

We study the problem of clustering sequences of unlabeled point

sets taken from a common metric space. Such scenarios arise naturally

in applications where a system or process is observed in distinct time

intervals, such as biological surveys and contagious disease

surveillance. In this more general setting, existing algorithms for

classical (i.e., static) clustering problems are no longer applicable.

We propose a set of optimization problems which we collectively refer

to as temporal clustering. The quality of a solution to a

temporal clustering instance can be quantified using three parameters:

the number of clusters $k$, the spatial clustering cost $r$, and the

maximum cluster displacement $\delta$ between consecutive time steps.

We consider spatial clustering costs which

generalize the well-studied $k$-center, discrete $k$-median, and

discrete $k$-means objectives of classical clustering problems. We

develop new algorithms that achieve trade-offs between the three

objectives $k$, $r$, and $\delta$. Our upper bounds are complemented by

inapproximability results.
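The three parameters can be illustrated with a short sketch that evaluates a given candidate solution. The encoding is an assumption made for illustration, not the paper's formal definition: a solution is a list of $k$ center positions per time step, where center $j$ at step $t$ continues center $j$ at step $t-1$; the spatial cost $r$ is taken in the $k$-center sense (largest point-to-nearest-center distance), and $\delta$ is the largest move of any center between consecutive steps. The function name `temporal_clustering_cost` is hypothetical.

```python
from math import dist  # Euclidean distance, available since Python 3.8


def temporal_clustering_cost(point_sets, centers):
    """Evaluate (k, r, delta) for a candidate temporal clustering.

    point_sets[t] is the list of points observed at time step t.
    centers[t] is the list of k center positions at time step t; index j
    identifies the same cluster across time steps (an assumed encoding).
    """
    k = len(centers[0])
    # k-center style spatial cost: worst distance from a point to its nearest center
    r = max(
        min(dist(p, c) for c in cs)
        for pts, cs in zip(point_sets, centers)
        for p in pts
    )
    # largest displacement of any single center between consecutive steps
    delta = max(
        (dist(a, b)
         for prev, nxt in zip(centers, centers[1:])
         for a, b in zip(prev, nxt)),
        default=0.0,
    )
    return k, r, delta
```

With two time steps in which every point and every center shifts by one unit, the spatial cost stays the same at both steps while $\delta$ equals one.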

|

|

|

Topological analysis of nerves, Reeb spaces, mappers, and multiscale mappers

T. K. Dey, F. Memoli, and Y. Wang. Proc. Internat. Sympos. Comput. Geom. (SoCG 2017).

Full Version

[talk slides]

Data analysis often concerns not only the space where data come from, but also

various types of maps attached to data. In recent years, several

related structures have been used to study maps on data, including Reeb

spaces, mappers and multiscale mappers. The construction of these

structures also relies on the so-called nerve of a cover of the

domain.

In this paper, we aim to analyze the topological information

encoded in these structures in order to provide better understanding of

these structures and facilitate their practical usage.

More specifically, we show that the one-dimensional homology of

the nerve complex $N(\mathcal{U})$ of a path-connected cover

$\mathcal{U}$ of a domain $X$ cannot be richer than that of the domain

$X$ itself. Intuitively, this result means that no new $H_1$-homology