Purdue CS XR Lab

Our group conducts research that advances virtual, mixed, and augmented reality at the levels of theory, algorithms, systems, human-computer interaction, and applications.

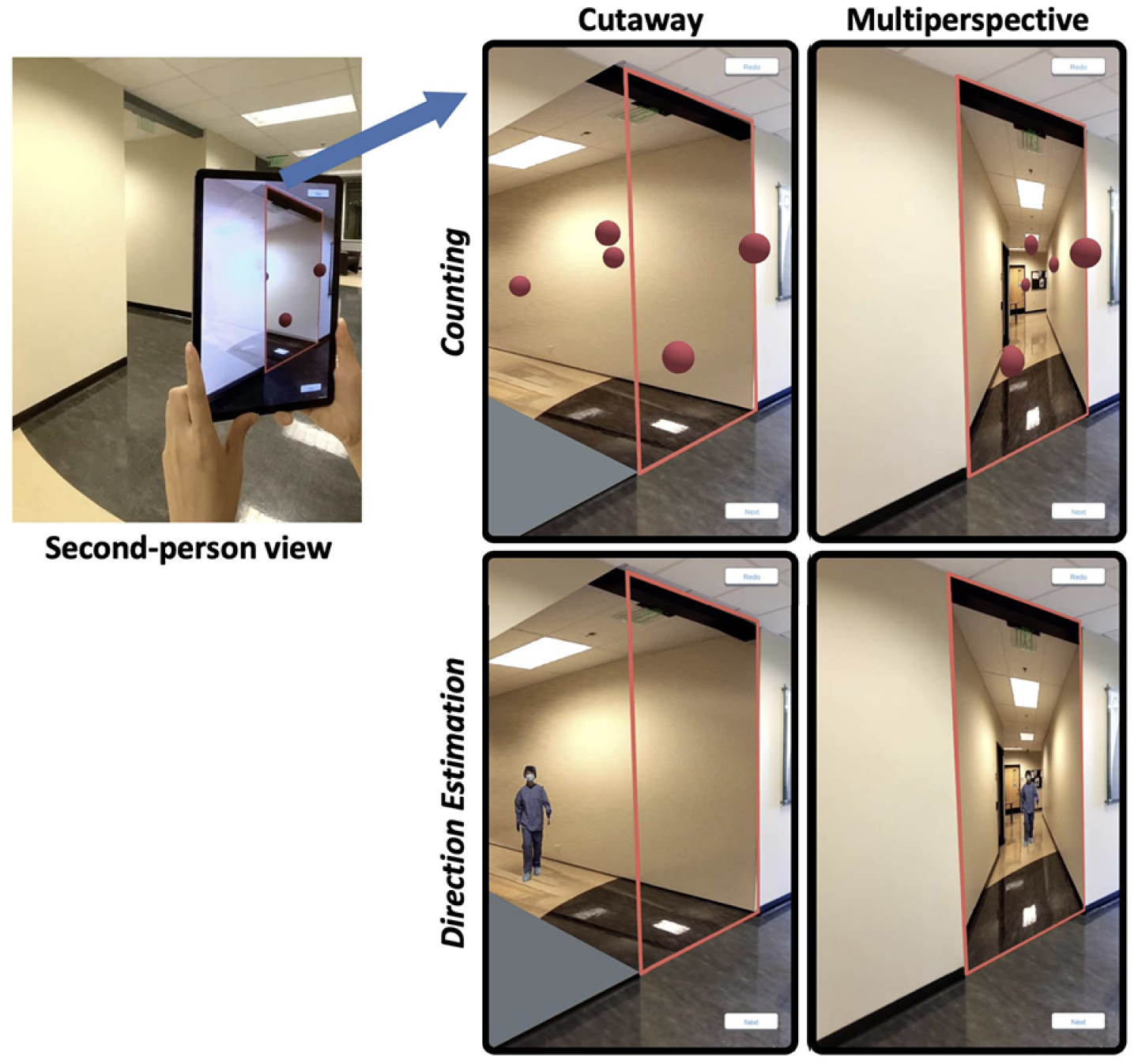

This project aims to improve virtual environment exploration efficiency by allowing the user to preview, from their current location, parts of the virtual environment to which they do not have line of sight. The preview helps avoid wasted navigation when the hidden part of the virtual environment proves to be of no interest.

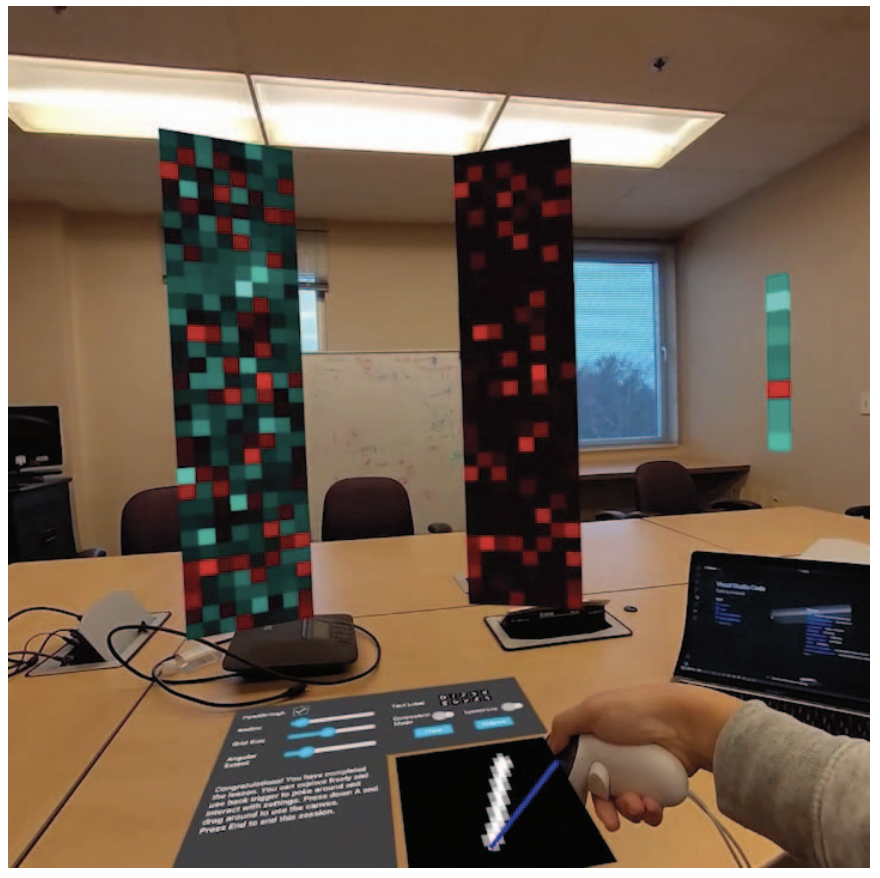

This project aims to allow a user of a VR application not only to see, but also to touch virtual objects. The project explores two complementary approaches: modifying the virtual world to align it with the physical world, using redirection algorithms, and modifying the physical world to align it with the virtual world, using encountered-type haptic devices (ETHDs).

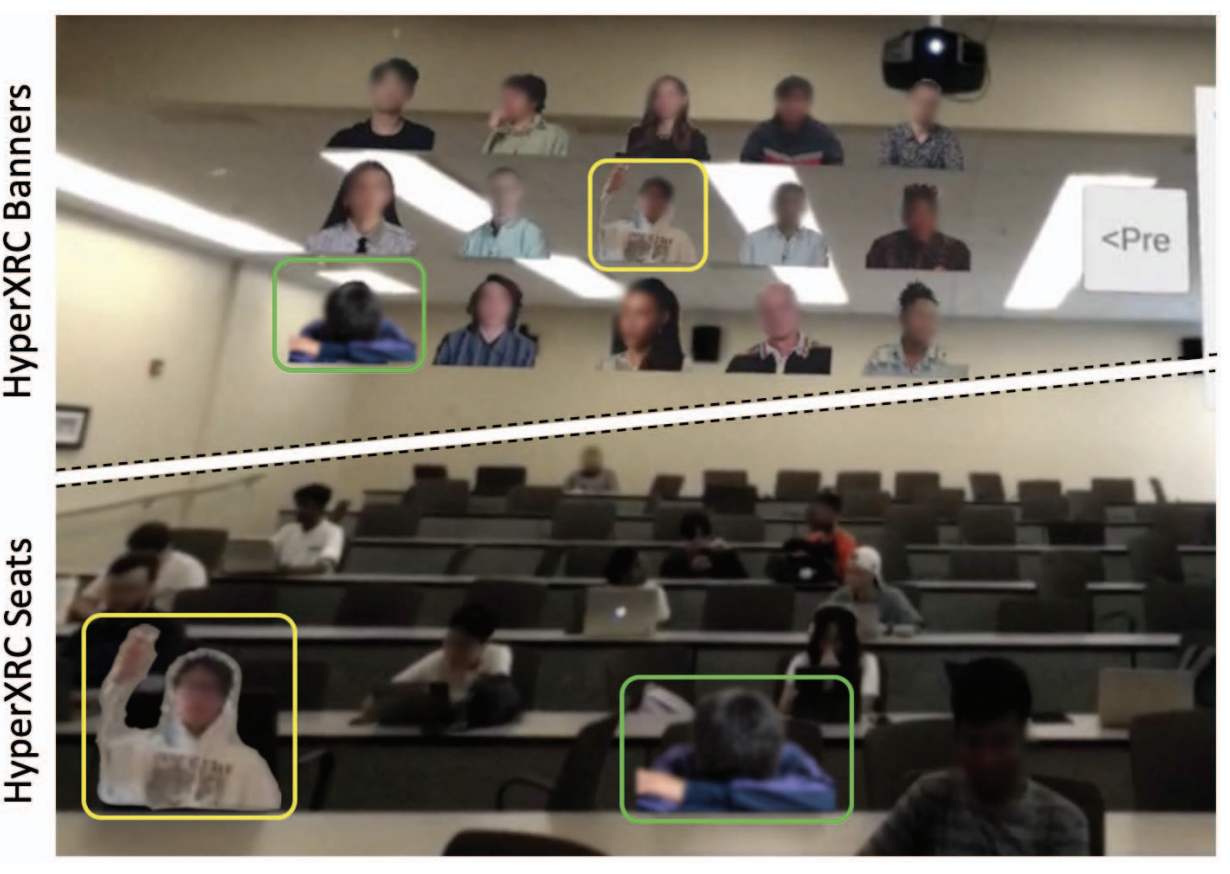

This project aims to bring the benefits of immersive visualization to education, for example to scaffold learning in AI, or to increase student engagement in large lectures.

This project aims to leverage XR's ability to abstract away geographic distances between remote collaborators. In the distance education context, the project aims to allow remote students to attend on-campus study groups and lectures. The two primary design concerns are effectiveness, meaning that remote students should benefit from these learning activities as much as if they were on campus, and unobtrusiveness, meaning that hosting remote students should not diminish the learning activity for the students on campus.

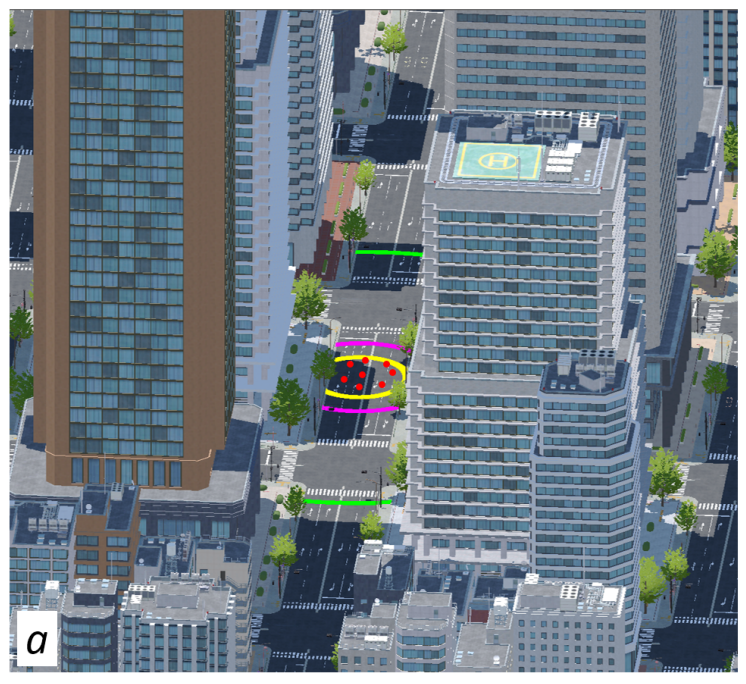

This project aims to make complex virtual environments tractable on thin XR clients, such as all-in-one XR headsets. The project investigates distributed VR systems with multiple clients supported by an edge server.