Are you happy, sad, or angry? This algorithm knows.

11-22-2022

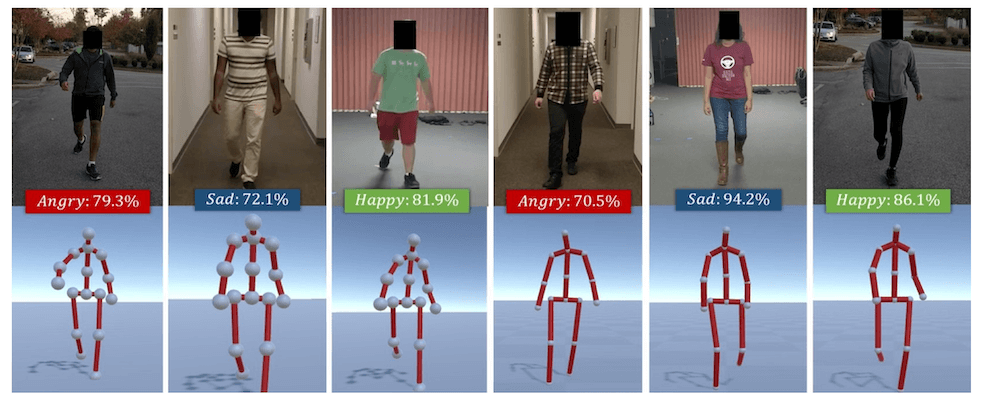

Figure 1: Modeling Perceived Emotions: A novel algorithm to model the perceived emotions of individuals based on their gaits for AR/VR applications. Given RGB videos of people’s walks (top), we extract their gaits as 3D pose sequences (bottom). The authors used a combination of deep features learned via an LSTM and affective features computed using posture and movement cues to classify those gaits into categorical emotions (e.g., happy, sad, angry, neutral).

Associate Professor Aniket Bera and his IDEAS lab developed a novel data-driven algorithm to learn emotions based on individuals’ walking gait.

Emotions are part of our everyday lives.

Emotions convey to the world how we are feeling and provide cues to others on how best to communicate with us. These inherently human perceptions can also be learned by AI algorithms, allowing technology to recognize perceived emotions.

Bera and team developed a novel data-driven algorithm to learn the perceived emotions of individuals based on walking motions or gaits. To do so, they built an AI model that learns psychology-driven “features” from thousands of videos of individuals walking. The goal was to exploit the gait features to model an individual's emotional state on an emotion or affect spectrum, expressed either in terms of Valence-Arousal-Dominance or as one of four categories: happy, sad, angry, or neutral.

For their work, Principal Investigator Aniket Bera, Associate Professor at Purdue University’s Department of Computer Science, and collaborators from Departments of Psychology and Computer Science at University of North Carolina at Chapel Hill and the University of Maryland at College Park (Tanmay Randhavane, Uttaran Bhattacharya, Pooja Kabra, Kyra Kapsaskis, Kurt Gray, Dinesh Manocha) were awarded best paper at the 15th Annual ACM SIGGRAPH MIG in November 2022.

This algorithm uses deep features learned using long short-term memory networks on large-scale datasets with labeled gaits. The researchers also combined the deep features with affective features developed based on prior work in social psychology relating to posture and movement measures to capture both a sense of "body language" and motion characteristics.
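As a rough illustration of that combination, the sketch below computes one posture cue and two movement cues from a short 3D pose sequence and appends them to a placeholder deep-feature vector. It is a minimal sketch under stated assumptions, not the researchers' implementation: the joint names, the specific cues chosen, the 30 frames-per-second default, and the function names are all hypothetical, and the deep features stand in for whatever an LSTM would output.

```python
import math

# Hypothetical input format: a gait is a list of frames, and each frame maps
# a joint name to its (x, y, z) position. Joint names here are illustrative.

def bounding_volume(frame):
    """Posture cue: volume of the axis-aligned box enclosing all joints."""
    xs, ys, zs = zip(*frame.values())
    return (max(xs) - min(xs)) * (max(ys) - min(ys)) * (max(zs) - min(zs))

def mean_joint_speed(frames, joint, dt):
    """Movement cue: average speed of one joint over consecutive frames."""
    dists = [math.dist(a[joint], b[joint]) for a, b in zip(frames, frames[1:])]
    return sum(dists) / (len(dists) * dt)

def affective_features(frames, dt=1.0 / 30.0):
    """Return a small, illustrative vector of posture and movement measures."""
    volumes = [bounding_volume(f) for f in frames]
    return [
        sum(volumes) / len(volumes),                 # average body "expansion"
        mean_joint_speed(frames, "right_hand", dt),  # arm swing speed
        mean_joint_speed(frames, "left_foot", dt),   # stride speed
    ]

def combine(deep_features, affective):
    """Concatenate learned (deep) and hand-crafted (affective) features."""
    return list(deep_features) + list(affective)
```

In a sketch like this, the combined vector would then be passed to a classifier that predicts one of the emotion categories; the classifier itself is outside the scope of the example.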

Compared to other state-of-the-art algorithms, the method Bera and his team developed achieves an overall classification accuracy of 80.07% on the EWalk dataset, improving on the current baselines by an absolute 13–24%.

“Our work encompasses disciplines, such as AI, engineering, psychology, education, and cognitive science, just to make our systems more behaviorally aware and emotionally intelligent,” said Bera.

Researchers at Bera’s IDEAS research lab at Purdue are already applying this gait-based behavior-learning research in other areas, including social and affective robotics, autonomous driving, VR/AR applications (virtual character/avatar generation), and mental health detection technologies, along with other potential applications of AI for improving public health. Bera is also working on using such human motion or gait-based research in areas such as aging or geriatric rehabilitation and depression detection by studying the relationships between mental health, age, diseases, and gait-based emotions. The IDEAS Research Group has published many papers on these applications with broader impact, available at: https://ideas.cs.purdue.edu/research/.

What’s next

For future research, Bera and team want to focus on combining their methods with other behavior modeling or causal-learning algorithms that use human speech and facial expressions.

“Imagine if computers can not only see you but also understand how you feel,” said Bera. He added, “Affective computing has the potential to humanize digital interactions and offer benefits in an almost limitless range of applications ranging from mental healthcare to immersive experiences to even social robotics.”

ABSTRACT

Learning Gait Emotions Using Affective and Deep Features

Tanmay Randhavane, Uttaran Bhattacharya, Pooja Kabra, Kyra Kapsaskis, Kurt Gray, Dinesh Manocha, Aniket Bera

We present a novel data-driven algorithm to learn the perceived emotions of individuals based on their walking motion or gaits. Given an RGB video of an individual walking, we extract their walking gait as a sequence of 3D poses. Our goal is to exploit the gait features to learn and model the emotional state of the individual into one of four categorical emotions: happy, sad, angry, or neutral. Our perceived emotion identification approach uses deep features learned using long short-term memory networks (LSTMs) on datasets with labeled emotive gaits. We combine these features with gait-based affective features consisting of posture and movement measures. Our algorithm identifies both the categorical emotions from the gaits and the corresponding values for the dimensional emotion components - valence and arousal. We also introduce and benchmark a dataset called Emotion Walk (EWalk), consisting of videos of gaits of individuals annotated with emotions. We show that our algorithm mapping the combined feature space to the perceived emotional state provides an accuracy of 80.07% on the EWalk dataset, outperforming the current baselines by an absolute 13–24%.

About the 15th Annual ACM SIGGRAPH Conference on Motion, Interaction and Games (MIG 2022)

ACM SIGGRAPH is a special interest group of the ACM. It comprises researchers, artists, developers, filmmakers, scientists and business professionals who specialize in computer graphics and interactive techniques. The goal of the ACM SIGGRAPH MIG conference is to bring together researchers in a variety of fields to present work and initiate collaborations on motion as applied to game technology, robotics, simulation, and computer vision, as well as physics, psychology and urban studies.

About the Department of Computer Science at Purdue University

Founded in 1962, the Department of Computer Science was created to be an innovative base of knowledge in the emerging field of computing as the first degree-awarding program in the United States. The department continues to advance the computer science industry through research. U.S. News & World Report ranks Purdue CS #20 and #16 overall in graduate and undergraduate programs respectively, seventh in cybersecurity, 10th in software engineering, 13th in programming languages, data analytics, and computer systems, and 19th in artificial intelligence. Graduates of the program are able to solve complex and challenging problems in many fields. Our consistent success in an ever-changing landscape is reflected in the record undergraduate enrollment, increased faculty hiring, innovative research projects, and the creation of new academic programs. The increasing centrality of computer science in academic disciplines and society, and new research activities centered around data science, artificial intelligence, programming languages, theoretical computer science, machine learning, and cybersecurity, are the future focus of the department. cs.purdue.edu

Writer: Emily Kinsell, emily@purdue.edu

Source: Aniket Bera, ab@cs.purdue.edu